
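Method 3:

```python
import http.cookiejar
import urllib.request

cj = http.cookiejar.CookieJar()  # in-memory cookie store
opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(cj))  # stores and resends cookies
urllib.request.install_opener(opener)  # urlopen() now goes through this opener
response3 = urllib.request.urlopen('https://www.baidu.com/')
print(response3.getcode())    # HTTP status code
print(len(response3.read()))  # length of the page body in bytes
```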
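To see what the cookie jar actually does, here is a minimal sketch that runs offline against a throwaway local server instead of Baidu. The handler class, the `session=abc123` cookie and the `demo_cookie_jar` function are hypothetical, made up for the demo. It calls `opener.open()` directly, which behaves like `install_opener()` followed by `urlopen()` but avoids changing global state.

```python
import http.cookiejar
import http.server
import threading
import urllib.request


class CookieHandler(http.server.BaseHTTPRequestHandler):
    """Hypothetical local handler: sets one cookie and echoes the Cookie header it received."""

    def do_GET(self):
        body = ("Cookie header seen: %s" % self.headers.get("Cookie")).encode("utf-8")
        self.send_response(200)
        self.send_header("Set-Cookie", "session=abc123; Path=/")
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the demo output quiet


def demo_cookie_jar():
    # Port 0 lets the OS pick a free port.
    server = http.server.HTTPServer(("127.0.0.1", 0), CookieHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    url = "http://127.0.0.1:%d/" % server.server_address[1]
    try:
        cj = http.cookiejar.CookieJar()
        opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(cj))
        with opener.open(url) as resp:   # first request: server sets the cookie
            first = resp.read().decode("utf-8")
        with opener.open(url) as resp:   # second request: the jar sends it back
            second = resp.read().decode("utf-8")
        return cj, first, second
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    cj, first, second = demo_cookie_jar()
    for cookie in cj:
        print(cookie.name, cookie.value)  # session abc123
    print(first)   # Cookie header seen: None
    print(second)  # Cookie header seen: session=abc123
```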

Method 2:

```python
import urllib.request

req = urllib.request.Request('https://www.baidu.com/')
req.add_header('User-Agent', 'Mozilla/5.0')  # present the request as coming from a browser
response = urllib.request.urlopen(req)
print(response.getcode())
print(response)
cont = response.read()
print(cont)
```
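A `Request` can be inspected before anything is sent, which helps when debugging headers. This sketch only builds request objects (no network access). Note that urllib stores header names via `str.capitalize()`, so `User-Agent` is kept as `User-agent`.

```python
import urllib.request

# Build the request object only; nothing is sent until urlopen() is called.
req = urllib.request.Request("https://www.baidu.com/")
req.add_header("User-Agent", "Mozilla/5.0")

print(req.header_items())            # [('User-agent', 'Mozilla/5.0')]
print(req.get_header("User-agent"))  # Mozilla/5.0
print(req.get_header("User-Agent"))  # None: lookups use the capitalized key
print(req.get_method())              # GET, because no data is attached

# The same header can be passed to the constructor in one step.
req2 = urllib.request.Request("https://www.baidu.com/", headers={"User-Agent": "Mozilla/5.0"})
print(req2.header_items())           # [('User-agent', 'Mozilla/5.0')]
```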

Method 1:

```python
import urllib.request

response = urllib.request.urlopen('https://www.baidu.com/')
print(response.getcode())
cont = response.read()  # raw page content as bytes
print(cont)
```
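`read()` returns bytes. One hedged way to get text is to close the response with `with` and decode using the charset from the `Content-Type` header, falling back to UTF-8. The helper name `fetch_text` is hypothetical, and the demo uses a `data:` URL so it runs offline; an `http(s)` URL goes through the same code path.

```python
import urllib.request


def fetch_text(url, default_charset="utf-8"):
    """Download url and decode the body, using the Content-Type charset when present."""
    with urllib.request.urlopen(url) as response:  # closes the response when done
        raw = response.read()                       # bytes, as in Method 1
        charset = response.headers.get_content_charset() or default_charset
    return raw.decode(charset)


if __name__ == "__main__":
    print(fetch_text("data:text/plain;charset=utf-8,caf%C3%A9"))  # café
```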



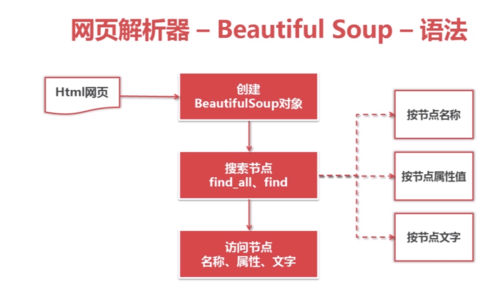

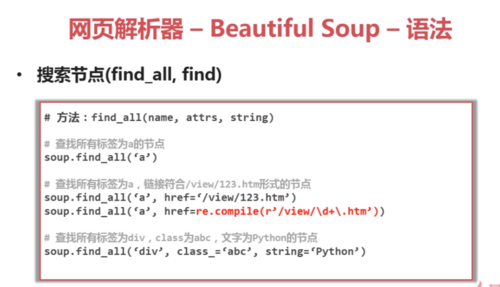

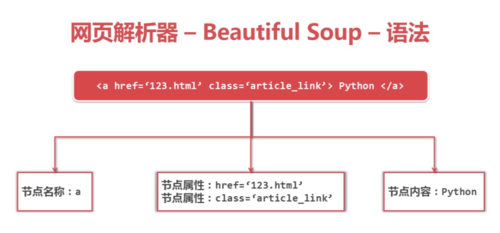

Parser: bs4 (BeautifulSoup 4):


```python
# -*- coding: utf-8 -*-
import re  # regular expressions

from bs4 import BeautifulSoup  # HTML parser library

html_doc = """
<html><head><title>The Dormouse's story</title></head>
<body>
<p class="title"><b>The Dormouse's story</b></p>
<p class="story">Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1">Elsie</a>,
<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and
<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>;
and they lived at the bottom of a well.</p>
<p class="story">...</p>
"""

# 1. Create the BeautifulSoup object from the HTML string and the parser name.
#    In Python 3 html_doc is a str, so from_encoding would be ignored (bs4 warns); it is dropped here.
soup = BeautifulSoup(html_doc, 'html.parser')

# 2. Search for nodes (find_all, find).
print('All links')
links = soup.find_all('a')
for link in links:
    # 3. Access node content: tag name, attribute, text.
    print(link.name, link['href'], link.get_text())

print("Lacie's link")
link_node = soup.find('a', href='http://example.com/lacie')
print(link_node.name, link_node['href'], link_node.get_text())

print('Regex match')
link_node = soup.find('a', href=re.compile(r"ill"))
print(link_node.name, link_node['href'], link_node.get_text())

print('Text of the title paragraph')
link_node = soup.find('p', class_="title")
print(link_node.name, link_node.get_text())
```
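If bs4 is not installed, the standard library's `html.parser.HTMLParser` can collect the same links with more manual bookkeeping. This is a swapped-in technique, not BeautifulSoup: the `LinkCollector` class is a hypothetical sketch that mimics `soup.find_all('a')` for `href` and text only.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect (href, text) pairs for every <a> tag that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []    # finished (href, text) pairs
        self._href = None  # href of the <a> currently open; None outside links
        self._text = []    # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")  # anchors without href are skipped
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text)))
            self._href = None


html_doc = """<p class="story"><a href="http://example.com/elsie" class="sister" id="link1">Elsie</a>,
<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and
<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a></p>"""

parser = LinkCollector()
parser.feed(html_doc)
parser.close()
for href, text in parser.links:
    print(href, text)
```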

A simple Python crawler


URL manager
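A URL manager tracks which URLs are still waiting to be crawled and which have already been handed out, so no page is fetched twice. Below is a minimal in-memory sketch; the class and method names are hypothetical, and two Python sets stand in for whatever storage a real crawler would use.

```python
class UrlManager:
    """Hypothetical URL manager: one set of URLs to crawl, one set already crawled."""

    def __init__(self):
        self.new_urls = set()  # waiting to be crawled
        self.old_urls = set()  # already handed out to the downloader

    def add_new_url(self, url):
        # Ignore empty values and anything already seen in either set.
        if url and url not in self.new_urls and url not in self.old_urls:
            self.new_urls.add(url)

    def add_new_urls(self, urls):
        for url in urls or ():
            self.add_new_url(url)

    def has_new_url(self):
        return len(self.new_urls) > 0

    def get_new_url(self):
        url = self.new_urls.pop()  # arbitrary order; a deque would give breadth-first order
        self.old_urls.add(url)
        return url
```

For example, after `add_new_urls(["/a", "/b", "/a"])` only two URLs are pending, and re-adding a URL returned by `get_new_url()` has no effect.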


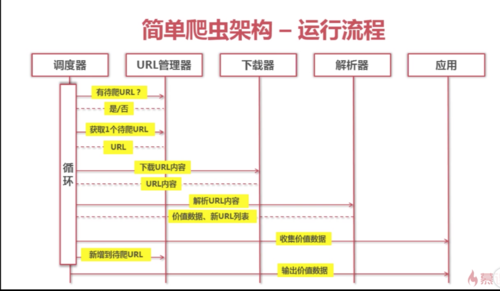

Simple crawler architecture: run flow
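The run flow is a loop: take a pending URL, download the page, parse it into new URLs plus data, queue the unseen URLs, and stop when nothing is pending or enough pages are collected. A hedged sketch follows; `crawl`, `download` and `parse` are hypothetical placeholders for the real downloader and parser, and the default `max_pages=1000` only mirrors the 1000-page target of the course's complete example.

```python
from collections import deque


def crawl(root_url, download, parse, max_pages=1000):
    """Hypothetical scheduler loop: URL manager -> downloader -> parser -> collected data.

    download(url) returns page content or None; parse(url, content) returns (new_urls, data).
    """
    to_visit = deque([root_url])  # URL manager: pending URLs, first in first out
    seen = {root_url}             # URL manager: every URL ever queued
    results = []                  # what an outputer would write out
    while to_visit and len(results) < max_pages:
        url = to_visit.popleft()
        content = download(url)
        if content is None:       # e.g. a failed or non-200 download
            continue
        new_urls, data = parse(url, content)
        for new_url in new_urls:
            if new_url not in seen:
                seen.add(new_url)
                to_visit.append(new_url)
        results.append(data)
    return results


if __name__ == "__main__":
    # A fake three-page "site" so the loop runs without network access.
    site = {"/python": ["/guido", "/pip"], "/guido": ["/python"], "/pip": []}
    pages = crawl(
        "/python",
        download=lambda url: site.get(url),
        parse=lambda url, links: (links, {"url": url, "links": len(links)}),
        max_pages=10,
    )
    print(pages)
```

Passing `download` and `parse` in as functions keeps the loop testable without a network; in a real crawler they would be the downloader and the BeautifulSoup-based parser.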


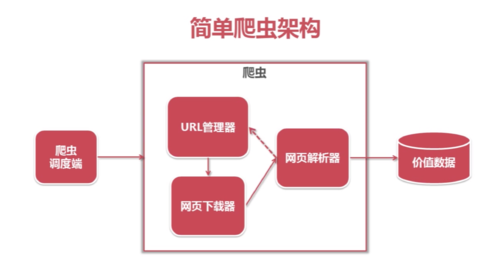

Simple crawler architecture
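The architecture ends with the valuable data the crawler keeps. As a hedged sketch of that last stage (the class name `HtmlOutputer`, its methods and the `url`/`title` columns are all hypothetical), parsed records can be collected and rendered as an HTML table, escaping page text with `html.escape` so it cannot break the markup.

```python
import html


class HtmlOutputer:
    """Hypothetical outputer: collects parsed records and renders them as an HTML table."""

    def __init__(self):
        self.records = []

    def collect_data(self, record):
        if record:  # skip None or empty results from the parser
            self.records.append(record)

    def render(self, columns=("url", "title")):
        rows = ["<table>"]
        rows.append("<tr>" + "".join("<th>%s</th>" % html.escape(c) for c in columns) + "</tr>")
        for record in self.records:
            cells = "".join("<td>%s</td>" % html.escape(str(record.get(c, ""))) for c in columns)
            rows.append("<tr>" + cells + "</tr>")
        rows.append("</table>")
        return "\n".join(rows)
```

To save the result, write `render()` to a file opened with `encoding="utf-8"` so Chinese page titles survive.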


```python
import urllib2  # Python 2 only; Python 3 moved this into urllib.request
```
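Because `urllib2` exists only in Python 2, code that must run on both versions can fall back to `urllib.request`. A small sketch; the alias `url_request` is a hypothetical name chosen for illustration.

```python
try:
    import urllib2 as url_request          # Python 2, as used in these notes
except ImportError:
    import urllib.request as url_request   # Python 3: urllib2 was merged into urllib.request

# The names used in these notes exist in both modules.
for name in ("urlopen", "Request", "build_opener", "install_opener", "HTTPCookieProcessor"):
    assert hasattr(url_request, name), name
print(url_request.__name__)
```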


A status code of 200 (as returned by getcode()) means the request succeeded.
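A downloader typically keeps a page only when the status is 200. Note that `urlopen` already raises `urllib.error.HTTPError` for 4xx/5xx codes, so both paths need handling. In this sketch, `download`, the demo handler and `start_demo_server` are hypothetical; the local server exists only so the example can be tried offline.

```python
import http.server
import threading
import urllib.error
import urllib.request
from http import HTTPStatus


def download(url, timeout=10):
    """Hypothetical downloader: return the body bytes on a 200 response, else None."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            if response.getcode() != HTTPStatus.OK:  # HTTPStatus.OK == 200, "success"
                return None
            return response.read()
    except urllib.error.HTTPError as err:  # raised by urlopen for 4xx/5xx responses
        print("HTTP error:", err.code, err.reason)
        return None
    except urllib.error.URLError as err:   # DNS failure, refused connection, ...
        print("Network error:", err.reason)
        return None


class _DemoHandler(http.server.BaseHTTPRequestHandler):
    """Local test server: "/" answers 200, every other path answers 404."""

    def do_GET(self):
        if self.path == "/":
            body = b"ok"
            self.send_response(200)
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # silence per-request logging


def start_demo_server():
    server = http.server.HTTPServer(("127.0.0.1", 0), _DemoHandler)  # port 0: any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, "http://127.0.0.1:%d" % server.server_address[1]


if __name__ == "__main__":
    server, base = start_demo_server()
    print(download(base + "/"))         # b'ok'
    print(download(base + "/missing"))  # prints the 404 error, then None
    server.shutdown()
    server.server_close()
```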


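Introduction to crawlers

Simple crawler architecture

URL manager

Web page downloader (urllib2)

Web page parser (BeautifulSoup)

Complete example: crawl data from 1000 Baidu Baike pages related to the Python entry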

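```python
# Python 2; in Python 3 these modules are urllib.request and http.cookiejar
import urllib2
import cookielib

cj = cookielib.CookieJar()  # in-memory cookie store
opener = urllib2.build_opener(urllib2.HTTPCookieProcessor(cj))  # stores and resends cookies
urllib2.install_opener(opener)  # urllib2.urlopen() now goes through this opener
response = urllib2.urlopen("http://www.baidu.com/")
```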

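```python
# Python 2
import urllib
import urllib2

url = "http://www.example.com/"  # placeholder: the page to request
request = urllib2.Request(url)
request.add_data(urllib.urlencode({'a': '1'}))  # add_data takes one form-encoded string; this makes a POST
request.add_header('User-Agent', 'Mozilla/5.0')  # present the request as coming from a browser
response = urllib2.urlopen(request)
```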
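In Python 3, `Request.add_data()` no longer exists; form data is encoded with `urllib.parse.urlencode`, converted to bytes, and passed as `data`. This sketch only builds the request (the URL is a hypothetical placeholder), so it runs without a network; attaching data switches the method to POST.

```python
import urllib.parse
import urllib.request

url = "http://www.example.com/login"  # hypothetical endpoint, for illustration only

# Python 3 equivalent of request.add_data(...): form-encode a dict, then pass bytes as data.
data = urllib.parse.urlencode({"a": "1"}).encode("ascii")
request = urllib.request.Request(url, data=data, headers={"User-Agent": "Mozilla/5.0"})

print(request.data)          # b'a=1'
print(request.get_method())  # POST, because data is not None
# response = urllib.request.urlopen(request)  # sends the POST; needs a reachable server
```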
