2 Answers

Contributed 1828 experience points · received 6+ likes

To get the DataFrame you want, you can do this:

    import requests
    import pandas as pd
    from bs4 import BeautifulSoup

    url = 'https://www.tennisexplorer.com/player/paire-4a33b/'
    soup = BeautifulSoup(requests.get(url).content, 'html.parser')

    all_data = []
    # Walk the 2020 match table, skipping any row that contains <th> cells.
    for row in soup.select('#matches-2020-1-data tr:not(:has(th))'):
        tds = [td.get_text(strip=True, separator=' ') for td in row.select('td')]
        all_data.append({
            # The tournament name sits in the nearest preceding "head flags" row.
            'tournament': row.find_previous('tr', class_='head flags').find('td').get_text(strip=True),
            'date': tds[0],
            'match_player_1': tds[2].split('-')[0].strip(),
            'match_player_2': tds[2].split('-')[-1].strip(),
            'round': tds[3],
            'score': tds[4]
        })

    df = pd.DataFrame(all_data)
    df.to_csv('data.csv')

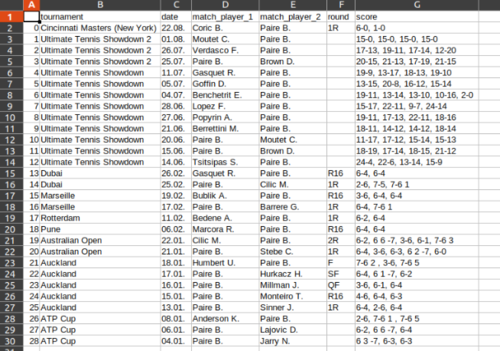

保存data.csv(来自 LibreOffice 的屏幕截图):
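One detail worth checking in the answer above: `tds[2].split('-')` splits on every hyphen, so a hyphenated surname would be cut in the middle. The sketch below is a hypothetical helper, `split_players` (not part of the original answer), that assumes the cell text separates the two players with a spaced hyphen (`' - '`) and falls back to a plain hyphen otherwise. The names in the example are placeholders, not data from the page.

```python
def split_players(cell_text):
    """Split a 'Player A - Player B' cell into two names.

    Assumption: the two names are separated by ' - ' (hyphen with spaces),
    so a hyphen inside a surname is left untouched.
    """
    first, sep, second = cell_text.partition(' - ')
    if not sep:
        # No spaced separator found: fall back to the first plain hyphen.
        first, _, second = cell_text.partition('-')
    return first.strip(), second.strip()


# Placeholder names for illustration only.
print(split_players('Smith A. - Jones-Brown C.'))
```

If the assumption holds for the real page, the two dictionary entries could use `split_players(tds[2])` instead of the two `split('-')` calls.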

Contributed 1836 experience points · received 5+ likes

Try this:

    import pandas as pd

    url = "https://www.tennisexplorer.com/player/paire-4a33b/"
    # Table index 8 is the one used here; it depends on the page layout.
    df = pd.read_html(url)[8]

    new_data = {"tournament": [], "date": [], "match_player_1": [], "match_player_2": [],
                "round": [], "score": []}

    for index, row in df.iterrows():
        try:
            # A match row starts with a date; with its last character dropped,
            # the value parses as a float. Header rows raise and go to except.
            date = float(row.iloc[0][:-1])
            new_data["tournament"].append(tourn)
            new_data["date"].append(row.iloc[0])
            new_data["match_player_1"].append(row.iloc[2].split("-")[0])
            new_data["match_player_2"].append(row.iloc[2].split("-")[1])
            new_data["round"].append(row.iloc[3])
            new_data["score"].append(row.iloc[4])
        except Exception as e:
            # Not a match row: remember it as the current tournament name.
            tourn = row.iloc[0]

    data = pd.DataFrame(new_data)
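The loop above tells match rows from tournament headers by whether `float()` raises, which also hides unrelated errors. A minimal sketch of the same grouping idea with an explicit check follows. It assumes dates look like `dd.mm.` with a trailing dot (inferred from the `[:-1]` slice, not confirmed against the page); `group_rows`, `DATE_RE`, and the sample rows are hypothetical and made up for illustration.

```python
import re

# Assumed date format: day.month. with a trailing dot, e.g. "01.02."
DATE_RE = re.compile(r'^\d{1,2}\.\d{1,2}\.$')


def group_rows(rows):
    """Attach the most recent tournament name to each match row.

    `rows` is an iterable of lists of cell strings. A row whose first cell
    is not a date is treated as a tournament header.
    """
    tournament = None
    matches = []
    for cells in rows:
        first = str(cells[0])
        if DATE_RE.match(first):
            matches.append({'tournament': tournament,
                            'date': first,
                            'cells': list(cells[1:])})
        else:
            tournament = first
    return matches


# Made-up rows, only to show the shape of the input.
sample = [
    ['Tournament A'],
    ['01.02.', '', 'Player X - Player Y', '1R', '2-0'],
    ['Tournament B'],
    ['03.04.', '', 'Player Y - Player Z', 'F', '0-2'],
]
for match in group_rows(sample):
    print(match['tournament'], match['date'])
```

With a real table, `df.values.tolist()` would be one way to produce the `rows` argument.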
