import urllib.request  # Python 3 replaced urllib2 with urllib.request

print("Method 1")

url = "https://dxy.com/faq"

response_1 = urllib.request.urlopen(url)

print(response_1.getcode())  # HTTP status code; 200 means success

# print(response_1.read())  # raw bytes, not decoded

print(len(response_1.read()))
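
# Method 1 only prints the body's length because read() returns raw bytes.
# A minimal sketch of decoding them, assuming the charset in the Content-Type
# header can be trusted (UTF-8 is an assumed fallback; some pages declare their
# encoding only in a <meta> tag). fetch_text() is a hypothetical helper, and a
# data: URL stands in for the real page so the sketch runs without network access.
import urllib.parse
import urllib.request


def fetch_text(url, default_charset="utf-8"):
    """Hypothetical helper: fetch url and return its body as a str."""
    with urllib.request.urlopen(url) as resp:
        raw = resp.read()  # bytes, exactly as received
        # get_content_charset() reads the charset= parameter of Content-Type
        charset = resp.headers.get_content_charset() or default_charset
    return raw.decode(charset, errors="replace")


demo_url = "data:text/html;charset=utf-8," + urllib.parse.quote("<p>你好</p>")
text = fetch_text(demo_url)
print(len(text), text)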

print("\nMethod 2")

request = urllib.request.Request(url)  # fix for error 1: urllib.request is a module; the class is urllib.request.Request

request.add_header("User-Agent", "Mozilla/5.0")  # disguise the crawler as a browser

response_2 = urllib.request.urlopen(request)

print(response_2.getcode())

print(len(response_2.read()))
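
# Why method 2 sets a User-Agent at all: a default opener announces itself as
# "Python-urllib/<version>", which some sites refuse. This sketch only builds
# and inspects the objects; nothing is sent over the network. "Mozilla/5.0" is
# the placeholder value used above, not a complete browser string.
import urllib.request

default_opener = urllib.request.build_opener()
print(default_opener.addheaders)  # the opener's built-in User-agent

# Passing headers= is equivalent to calling add_header() afterwards.
req = urllib.request.Request(
    "https://dxy.com/faq",
    headers={"User-Agent": "Mozilla/5.0"},
)
# Request normalises header names with str.capitalize(), so the key is stored
# as "User-agent". When the request is sent, the opener adds its own default
# only if the Request has no User-agent of its own.
print(req.header_items())
print(req.get_method(), req.host, req.selector)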

print("\nMethod 3")

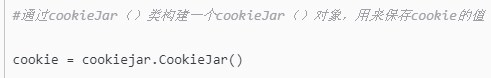

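import http.cookiejar  # Python 3 renamed cookielib to http.cookiejar

cj = http.cookiejar.CookieJar()  # fix for error 2: the cookielib module does not exist in Python 3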

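# Build an opener whose HTTPCookieProcessor stores response cookies in cj
# and sends them back on later requests
opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(cj))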

# Install it globally so that plain urlopen() calls go through it from now on
urllib.request.install_opener(opener)

response_3 = urllib.request.urlopen(url)

print(response_3.getcode())  # HTTP status code; 200 means success

print(cj)  # cookies received from the server (Python 3 print() syntax)

print(len(response_3.read()))
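
# To watch method 3's cookie handling end to end without contacting dxy.com,
# a minimal sketch against a throwaway local server. The handler, its /login
# path and the "session=abc123" cookie are hypothetical, invented for the demo.
# It calls opener.open() instead of install_opener() so the global default
# opener is left untouched.
import http.cookiejar
import http.server
import threading
import urllib.request


class CookieDemoHandler(http.server.BaseHTTPRequestHandler):
    """Hypothetical server: /login sets a cookie, any other path echoes it."""

    def do_GET(self):
        if self.path == "/login":
            body = b"logged in"
            extra = [("Set-Cookie", "session=abc123; Path=/")]  # hand out a cookie
        else:
            # Echo back whatever Cookie header the client sent
            body = (self.headers.get("Cookie") or "").encode("utf-8")
            extra = []
        self.send_response(200)
        for name, value in extra:
            self.send_header(name, value)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the console quiet


def cookie_round_trip():
    # Port 0 asks the OS for any free port
    server = http.server.HTTPServer(("127.0.0.1", 0), CookieDemoHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base = "http://127.0.0.1:%d" % server.server_address[1]
    try:
        jar = http.cookiejar.CookieJar()
        opener = urllib.request.build_opener(
            urllib.request.ProxyHandler({}),  # ignore any *_proxy environment variables
            urllib.request.HTTPCookieProcessor(jar),
        )
        with opener.open(base + "/login") as resp:  # server sets the cookie
            resp.read()
        with opener.open(base + "/") as resp:  # jar sends it back
            echoed = resp.read().decode("utf-8")
        return [c.name for c in jar], echoed
    finally:
        server.shutdown()
        server.server_close()


names, echoed = cookie_round_trip()
print(names)   # cookie names stored in the jar
print(echoed)  # Cookie header the jar sent on the second request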