First published on 编码如写诗.

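As the push toward domestically produced IT infrastructure continues, demand is growing for K8s clusters built on Kunpeng processors (Kunpeng-920) and the Kylin V10 operating system. Because some environments in China have no direct Internet access, offline deployment has become a common approach.

This article walks through an offline deployment of K8s (v1.23.17), together with KubeSphere (v3.4.1) and Harbor (v2.7.1), on Kylin Linux Advanced Server V10. It covers the key steps of installing Docker, setting up a private Harbor registry, installing the K8s cluster, and pushing images, and it targets ARM64 environments.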

1. Environment and Software Versions

Software versions used in this environment

- Server chip: Kunpeng-920
- Operating system: Kylin V10 SP2 aarch64
- Docker: 24.0.7
- Harbor: v2.7.1
- Kubernetes: v1.23.17
- KubeSphere: v3.4.1
- KubeKey: v3.1.5

Basic server information

[root@0003 k8s-init]# uname -a
Linux 0003.novalocal 4.19.90-24.4.v2101.ky10.aarch64 #1 SMP Mon May 24 14:45:37 CST 2021 aarch64 aarch64 aarch64 GNU/Linux
[root@0003 k8s-init]# cat /etc/os-release
NAME="Kylin Linux Advanced Server"
VERSION="V10 (Sword)"
ID="kylin"
VERSION_ID="V10"
PRETTY_NAME="Kylin Linux Advanced Server V10 (Sword)"
ANSI_COLOR="0;31"
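Since every package in this guide is built for aarch64, it can be worth confirming the architecture on each node before going further. The snippet below is a minimal sketch; the helper name `is_arm64` is made up for illustration.

```shell
# Return success when the given machine string denotes a 64-bit ARM system.
# The string is passed in so the check can also be run against saved output.
is_arm64() {
  case "$1" in
    aarch64|arm64) return 0 ;;
    *) return 1 ;;
  esac
}

if is_arm64 "$(uname -m)"; then
  echo "architecture OK: $(uname -m)"
else
  echo "unexpected architecture: $(uname -m) (this guide assumes aarch64)"
fi
```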

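2. Notes

This article only demonstrates the offline deployment itself. To build the offline artifact and the other installation packages yourself, see the author's earlier articles; they can also be obtained by adding the author on WeChat: sd_zdhr.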

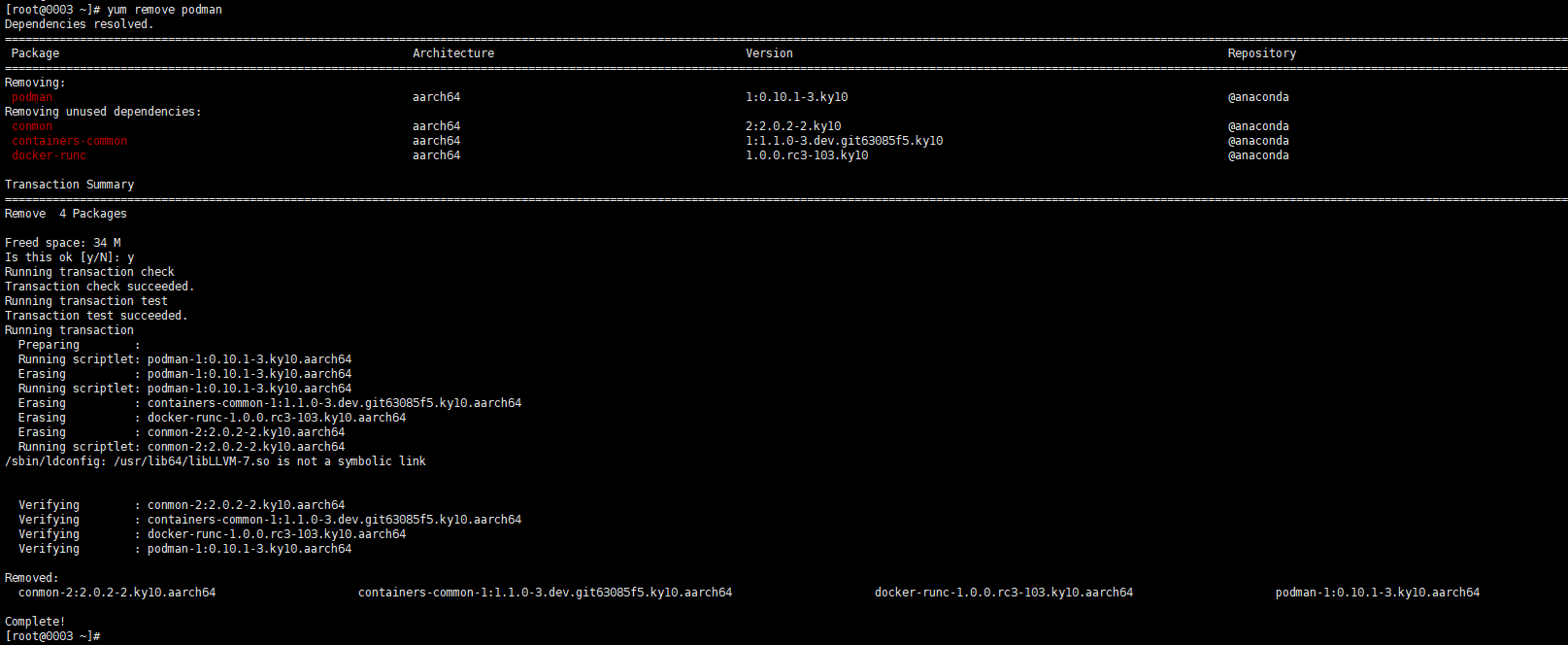

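3. Remove the Podman Bundled with Kylin

Kylin ships with Podman as its container engine, but Podman can conflict with Docker, causing CoreDNS and NodeLocalDNS to fail or triggering Docker permission problems. It is therefore recommended to uninstall it outright: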

yum remove podman
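When the same step has to be repeated on several nodes, a guarded, non-interactive variant may be more convenient. This is only a sketch: the function name `remove_podman` is illustrative, and it assumes `rpm` and `yum` are available, as they are on Kylin V10.

```shell
# Remove Podman only if the package is installed, without an interactive prompt.
remove_podman() {
  if rpm -q podman >/dev/null 2>&1; then
    yum remove -y podman
  else
    echo "podman is not installed; nothing to remove"
  fi
}
```

Run `remove_podman` as root on each node; calling it a second time is harmless.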

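4. Copy the Installation Packages to the Offline Environment

Copy the downloaded KubeKey binary, the artifact, the scripts, and the exported images to the installation node in the offline environment using a USB drive or similar media.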

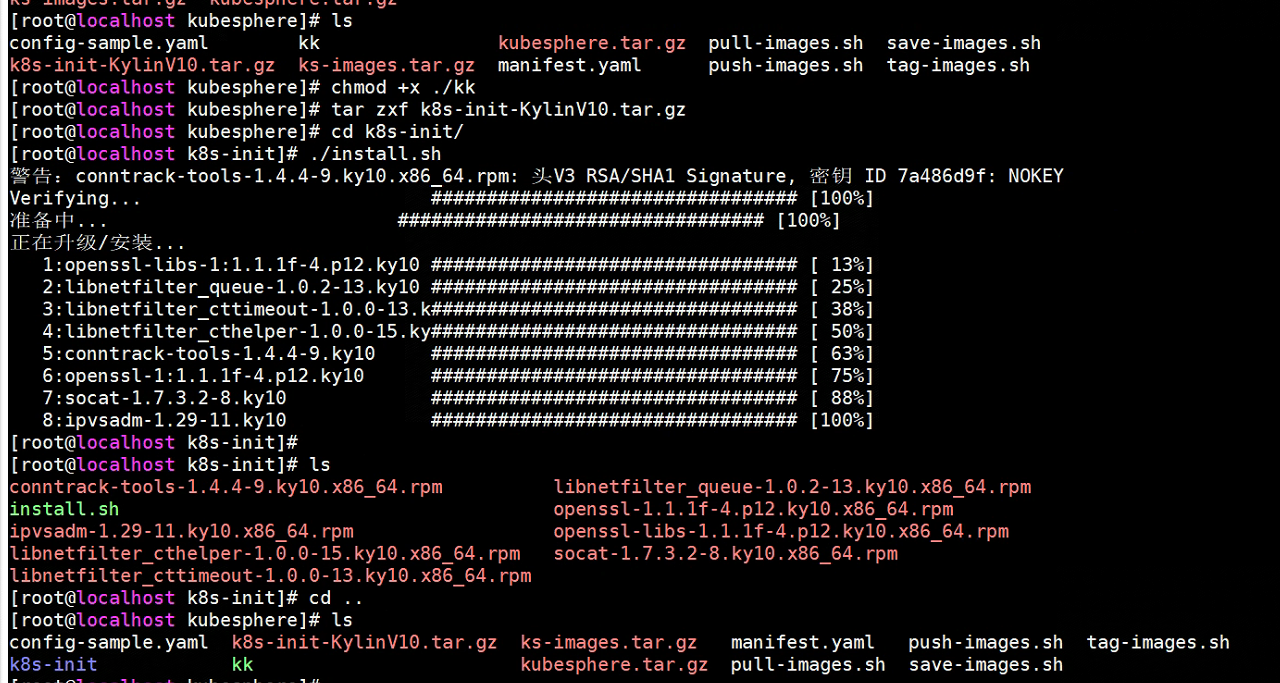
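Large archives occasionally get corrupted when carried over on removable media, so verifying checksums after the copy is a cheap safeguard. The sketch below is not part of the original procedure; the manifest name `SHA256SUMS` and both function names are assumptions made for illustration.

```shell
MANIFEST=SHA256SUMS   # hypothetical manifest file name

# On the connected machine, before copying: record a checksum for every file.
write_manifest() (
  cd "$1" || exit 1
  find . -type f ! -name "$MANIFEST" -exec sha256sum {} + > "$MANIFEST"
)

# On the offline node, after copying: fail if any file is missing or altered.
verify_manifest() (
  cd "$1" || exit 1
  sha256sum -c "$MANIFEST"
)
```

Call `write_manifest /path/to/packages` before copying and `verify_manifest /path/to/packages` afterwards; a non-zero exit status from the second call means at least one file should be copied again.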

5. Install the K8s Dependency Packages

Perform the following on every K8s node:

- Upload k8s-init-KylinV10.tar.gz to the server.
- Extract it and run install.sh.

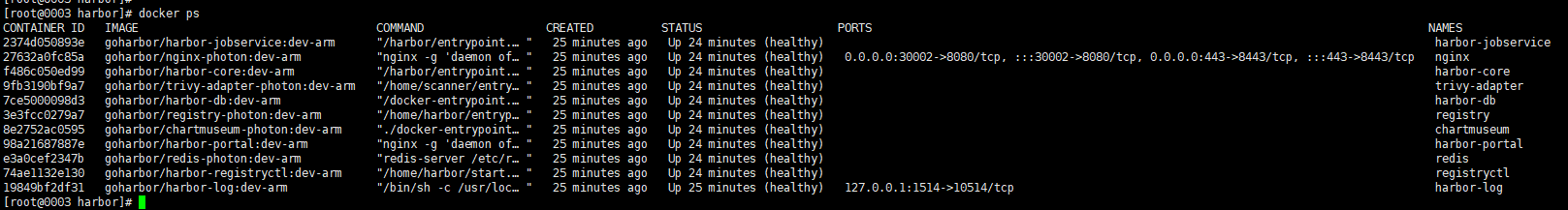

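6. Install the Harbor Private Registry

Upstream Harbor does not provide an ARM installation package, so KubeKey cannot install it automatically and it has to be installed manually.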

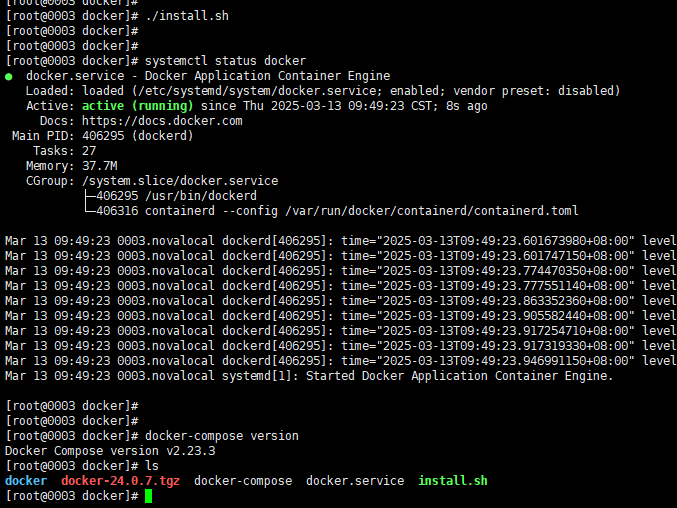

Install Docker and Docker-Compose

Installation package: Docker

Extract it and run the install.sh inside.

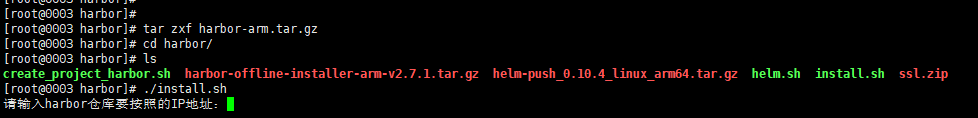

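Install Harbor

Installation package: Harbor

Extract it and run the install.sh inside.

The installer prompts for the IP address of the Harbor private registry; enter it and wait for the installation to finish.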
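The following steps address Harbor by the name dockerhub.kubekey.local, so every node needs to resolve that name to the Harbor IP. The provided install.sh may already take care of this; if it does not, an /etc/hosts entry is one way to do it. The sketch below is an assumption rather than part of the original packages: `add_hosts_entry` is a made-up helper, and 192.168.200.7 is simply the node1 address used in the configuration later in this article.

```shell
# Append "IP NAME" to a hosts file unless NAME is already mapped there.
# Usage: add_hosts_entry IP NAME [FILE]   (FILE defaults to /etc/hosts)
add_hosts_entry() (
  ip=$1
  name=$2
  file=${3:-/etc/hosts}
  if awk -v n="$name" '
       /^[ \t]*#/ { next }                                   # skip comment lines
       { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }  # exact host name match
       END { exit !found }
     ' "$file"; then
    echo "$name is already mapped in $file"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
    echo "added: $ip $name"
  fi
)
```

For example, `add_hosts_entry 192.168.200.7 dockerhub.kubekey.local` run as root on each node; repeated calls leave the file unchanged.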

- Create the projects in Harbor

vim create_project_harbor.sh

#!/usr/bin/env bash

# Copyright 2018 The KubeSphere Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

url="https://dockerhub.kubekey.local"  # set url to https://dockerhub.kubekey.local
user="admin"
passwd="Harbor12345"

harbor_projects=(
    kubesphereio
    kubesphere
)

for project in "${harbor_projects[@]}"; do
    echo "creating $project"
    curl -u "${user}:${passwd}" -X POST -H "Content-Type: application/json" "${url}/api/v2.0/projects" -d "{ \"project_name\": \"${project}\", \"public\": true}" -k  # -k appended to the curl command
done

Make the script executable, then run it to create the projects: ./create_project_harbor.sh
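To confirm that both projects exist before pushing images, the Harbor API can be queried. The sketch below relies on the Harbor v2.0 API answering a HEAD request on /api/v2.0/projects?project_name=NAME with 200 when the project exists and 404 when it does not; that is my understanding of the API, so check it against the Swagger page of your Harbor instance. The function names are illustrative.

```shell
url="https://dockerhub.kubekey.local"
user="admin"
passwd="Harbor12345"

# Print the HTTP status code of a HEAD request for one project.
project_status() {
  curl -k -s -o /dev/null -w '%{http_code}' -I -u "${user}:${passwd}" \
    "${url}/api/v2.0/projects?project_name=$1"
}

# Report every given project; return non-zero if any of them is missing.
check_projects() {
  rc=0
  for project in "$@"; do
    code=$(project_status "$project")
    if [ "$code" = "200" ]; then
      echo "ok: $project"
    else
      echo "missing: $project (HTTP $code)"
      rc=1
    fi
  done
  return $rc
}
```

A typical call would be `check_projects kubesphereio kubesphere`.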

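7. Edit the config-sample.yaml Configuration File

This file defines the K8s cluster configuration, including the Harbor private registry, the K8s version, the network plugin, and the storage configuration.

- The registry role group must name the node that hosts the image registry (KubeKey uses it when it deploys a self-hosted registry).
- Under registry, omitting type: harbor makes KubeKey install Docker Registry by default, since upstream Harbor does not support ARM. If Harbor is required, install it yourself, or install it after KubeSphere has been deployed (uninstalling Docker Registry first).

K8s only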

apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: node1, address: 192.168.200.7, internalAddress: "192.168.200.7", user: root, password: "123456", arch: arm64}
  roleGroups:
    etcd:
    - node1 # All the nodes in your cluster that serve as the etcd nodes.
    master:
    - node1
    # - node[2:10] # From node2 to node10. All the nodes in your cluster that serve as the master nodes.
    worker:
    - node1
    registry:
    - node1
  controlPlaneEndpoint:
    # Internal loadbalancer for apiservers. Support: haproxy, kube-vip [Default: ""]
    internalLoadbalancer: haproxy
    domain: lb.kubesphere.local
    address: ""
    port: 6443
  system:
    ntpServers:
      - node1 # All nodes synchronize their time with node1.
    timezone: "Asia/Shanghai"
  kubernetes:
    version: v1.25.16
    containerManager: docker
    clusterName: cluster.local
    # Whether to install a script which can automatically renew the Kubernetes control plane certificates. [Default: false]
    autoRenewCerts: true
    # maxPods is the number of Pods that can run on this Kubelet. [Default: 110]
    maxPods: 210
  etcd:
    type: kubekey
    ## caFile, certFile and keyFile need not be set, if TLS authentication is not enabled for the existing etcd.
    # external:
    #   endpoints:
    #     - https://192.168.6.6:2379
    #   caFile: /pki/etcd/ca.crt
    #   certFile: /pki/etcd/etcd.crt
    #   keyFile: /pki/etcd/etcd.key
    dataDir: "/var/lib/etcd"
    heartbeatInterval: 250
    electionTimeout: 5000
    snapshotCount: 10000
    autoCompactionRetention: 8
    metrics: basic
    quotaBackendBytes: 2147483648
    maxRequestBytes: 1572864
    maxSnapshots: 5
    maxWals: 5
    logLevel: info
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    multusCNI:
      enabled: false
  storage:
    openebs:
      basePath: /var/openebs/local # base path of the local PV provisioner
  registry:
    type: harbor
    registryMirrors: []
    insecureRegistries: []
    privateRegistry: "dockerhub.kubekey.local"
    namespaceOverride: "kubesphereio"
    auths: # if docker add by `docker login`, if containerd append to `/etc/containerd/config.toml`
      "dockerhub.kubekey.local":
        username: "admin"
        password: Harbor12345
        skipTLSVerify: true # Allow contacting registries over HTTPS with failed TLS verification.
        plainHTTP: false # Allow contacting registries over HTTP.
        certsPath: "/etc/docker/certs.d/dockerhub.kubekey.local" # Use certificates at path (*.crt, *.cert, *.key) to connect to the registry.
  addons: [] # You can install cloud-native addons (Chart or YAML) by using this field.
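In an offline installation, the version under kubernetes should name a release that is actually packaged in the artifact, since KubeKey has nothing to download from. Note that the environment list at the top of this article names v1.23.17 while the sample above pins v1.25.16, so it is worth checking which one your artifact carries. The sketch below reads the value back from a config file; `config_k8s_version` is a made-up helper and assumes the standard two-space indentation of a KubeKey config.

```shell
# Print the value of spec.kubernetes.version from a KubeKey config file.
config_k8s_version() {
  awk '
    /^  kubernetes:/ { in_k8s = 1; next }   # enter the spec.kubernetes block
    /^  [^ #]/       { in_k8s = 0 }         # any other two-space key leaves it
    in_k8s && $1 == "version:" { print $2; exit }
  ' "$1"
}
```

For example, `config_k8s_version config-sample.yaml` prints the pinned version so it can be compared with the one used when the artifact was built.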

With KubeSphere

apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample
spec:
  hosts:
  - {name: node1, address: 192.168.200.7, internalAddress: "192.168.200.7", user: root, password: "123456"}
  roleGroups:
    etcd:
    - node1 # All the nodes in your cluster that serve as the etcd nodes.
    master:
    - node1
    # - node[2:10] # From node2 to node10. All the nodes in your cluster that serve as the master nodes.
    worker:
    - node1
    registry:
    - node1
  controlPlaneEndpoint:
    # Internal loadbalancer for apiservers. Support: haproxy, kube-vip [Default: ""]
    internalLoadbalancer: haproxy
    domain: lb.kubesphere.local
    address: ""
    port: 6443
  system:
    ntpServers:
      - node1 # All nodes synchronize their time with node1.
    timezone: "Asia/Shanghai"
  kubernetes:
    version: v1.25.16
    containerManager: docker
    clusterName: cluster.local
    # Whether to install a script which can automatically renew the Kubernetes control plane certificates. [Default: false]
    autoRenewCerts: true
    # maxPods is the number of Pods that can run on this Kubelet. [Default: 110]
    maxPods: 210
  etcd:
    type: kubekey
    ## caFile, certFile and keyFile need not be set, if TLS authentication is not enabled for the existing etcd.
    # external:
    #   endpoints:
    #     - https://192.168.6.6:2379
    #   caFile: /pki/etcd/ca.crt
    #   certFile: /pki/etcd/etcd.crt
    #   keyFile: /pki/etcd/etcd.key
    dataDir: "/var/lib/etcd"
    heartbeatInterval: 250
    electionTimeout: 5000
    snapshotCount: 10000
    autoCompactionRetention: 8
    metrics: basic
    quotaBackendBytes: 2147483648
    maxRequestBytes: 1572864
    maxSnapshots: 5
    maxWals: 5
    logLevel: info
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    multusCNI:
      enabled: false
  storage:
    openebs:
      basePath: /var/openebs/local # base path of the local PV provisioner
  registry:
    type: harbor
    registryMirrors: []
    insecureRegistries: []
    privateRegistry: "dockerhub.kubekey.local"
    namespaceOverride: "kubesphereio"
    auths: # if docker add by `docker login`, if containerd append to `/etc/containerd/config.toml`
      "dockerhub.kubekey.local":
        username: "admin"
        password: Harbor12345
        skipTLSVerify: true # Allow contacting registries over HTTPS with failed TLS verification.
        plainHTTP: false # Allow contacting registries over HTTP.
        certsPath: "/etc/docker/certs.d/dockerhub.kubekey.local" # Use certificates at path (*.crt, *.cert, *.key) to connect to the registry.
  addons: [] # You can install cloud-native addons (Chart or YAML) by using this field.

---
apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    version: v3.4.1
spec:
  persistence:
    storageClass: ""
  authentication:
    jwtSecret: ""
  zone: ""
  local_registry: ""
  namespace_override: ""
  # dev_tag: ""
  etcd:
    monitoring: true
    endpointIps: localhost
    port: 2379
    tlsEnable: true
  common:
    core:
      console:
        enableMultiLogin: true
        port: 30880
        type: NodePort
    # apiserver:
    #   resources: {}
    # controllerManager:
    #   resources: {}
    redis:
      enabled: false
      volumeSize: 2Gi
    openldap:
      enabled: false
      volumeSize: 2Gi
    minio:
      volumeSize: 20Gi
    monitoring:
      # type: external
      endpoint: http://prometheus-operated.kubesphere-monitoring-system.svc:9090
      GPUMonitoring:
        enabled: false
    gpu:
      kinds:
      - resourceName: "nvidia.com/gpu"
        resourceType: "GPU"
        default: true
    es:
      # master:
      #   volumeSize: 4Gi
      #   replicas: 1
      #   resources: {}
      # data:
      #   volumeSize: 20Gi
      #   replicas: 1
      #   resources: {}
      logMaxAge: 7
      elkPrefix: logstash
      basicAuth:
        enabled: false
        username: ""
        password: ""
      externalElasticsearchHost: ""
      externalElasticsearchPort: ""
    opensearch:
      # master:
      #   volumeSize: 4Gi
      #   replicas: 1
      #   resources: {}
      # data:
      #   volumeSize: 20Gi
      #   replicas: 1
      #   resources: {}
      enabled: true
      logMaxAge: 7
      opensearchPrefix: whizard
      basicAuth:
        enabled: true
        username: "admin"
        password: "admin"
      externalOpensearchHost: ""
      externalOpensearchPort: ""
      dashboard:
        enabled: false
  alerting:
    enabled: true
    # thanosruler:
    #   replicas: 1
    #   resources: {}
  auditing:
    enabled: false
    # operator:
    #   resources: {}
    # webhook:
    #   resources: {}
  devops:
    enabled: false
    # resources: {}
    jenkinsMemoryLim: 8Gi
    jenkinsMemoryReq: 4Gi
    jenkinsVolumeSize: 8Gi
  events:
    enabled: false
    # operator:
    #   resources: {}
    # exporter:
    #   resources: {}
    # ruler:
    #   enabled: true
    #   replicas: 2
    #   resources: {}
  logging:
    enabled: false
    logsidecar:
      enabled: true
      replicas: 2
      # resources: {}
  metrics_server:
    enabled: false
  monitoring:
    storageClass: ""
    node_exporter:
      port: 9100
      # resources: {}
    # kube_rbac_proxy:
    #   resources: {}
    # kube_state_metrics:
    #   resources: {}
    # prometheus:
    #   replicas: 1
    #   volumeSize: 20Gi
    #   resources: {}
    #   operator:
    #     resources: {}
    # alertmanager:
    #   replicas: 1
    #   resources: {}
    # notification_manager:
    #   resources: {}
    #   operator:
    #     resources: {}
    #   proxy:
    #     resources: {}
    gpu:
      nvidia_dcgm_exporter:
        enabled: false
        # resources: {}
  multicluster:
    clusterRole: none
  network:
    networkpolicy:
      enabled: false
    ippool:
      type: none
    topology:
      type: none
  openpitrix:
    store:
      enabled: false
  servicemesh:
    enabled: false
    istio:
      components:
        ingressGateways:
        - name: istio-ingressgateway
          enabled: false
        cni:
          enabled: false
  edgeruntime:
    enabled: false
    kubeedge:
      enabled: false
      cloudCore:
        cloudHub:
          advertiseAddress:
            - ""
        service:
          cloudhubNodePort: "30000"
          cloudhubQuicNodePort: "30001"
          cloudhubHttpsNodePort: "30002"
          cloudstreamNodePort: "30003"
          tunnelNodePort: "30004"
        # resources: {}
        # hostNetWork: false
      iptables-manager:
        enabled: true
        mode: "external"
        # resources: {}
      # edgeService:
      #   resources: {}
  terminal:
    timeout: 600

8. Push the Images

Extract the ks3.4.1-images.tar.gz image archive, then run ./load-push.sh to upload the images to the private registry.

- Push script

vim ./load-push.sh

#!/bin/bash
# Load every image archive under the current directory, retag it for Harbor, and push it.

FILES=$(find . -type f \( -iname "*.tar" -o -iname "*.tar.gz" \) -printf '%P\n')
Harbor="dockerhub.kubekey.local"
ProjectName="kubesphereio"

# Stop if the login fails; otherwise every push below would fail as well.
docker login -u admin -p Harbor12345 "${Harbor}" || exit 1
echo "--------[Login Harbor succeed]--------"

# Iterate over all ".tar" and ".tar.gz" files and load the images one by one.
# Note: file names containing spaces are not supported by this loop.
for file in ${FILES}
do
    echo "--------[Loading Docker image from $file]--------"
    docker load -i "$file" > loadimages
    # Full reference of the first image loaded from the archive.
    IMAGE=$(grep 'Loaded image:' loadimages | awk '{print $3}' | head -1)
    # Third path component (name:tag); assumes references of the form registry/namespace/name:tag.
    IMAGE2=$(echo "$IMAGE" | awk -F / '{print $3}')
    echo "--------[$IMAGE]--------"
    docker tag "$IMAGE" "$Harbor/$ProjectName/$IMAGE2"
    docker push "$Harbor/$ProjectName/$IMAGE2"
done
echo "--------[All Docker images push successfully]--------"

./load-push.sh

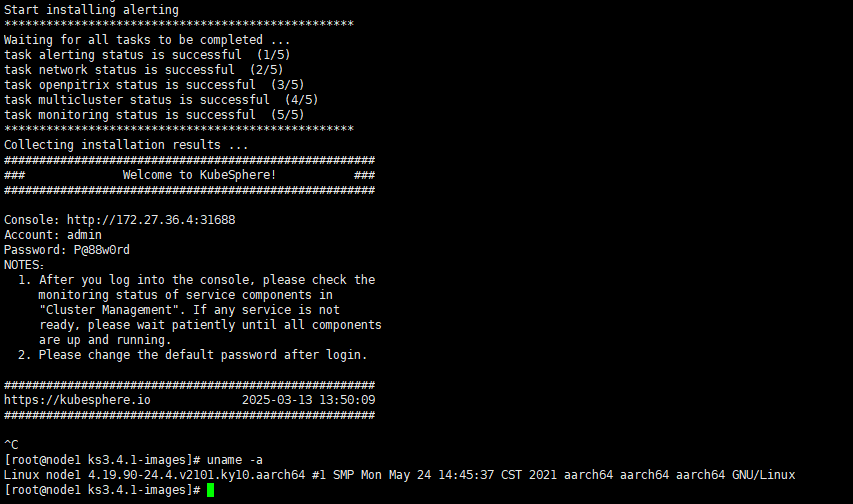
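The retagging step in the push script takes the third slash-separated component of the loaded image reference, which only works for references of the form registry/namespace/name:tag. As a possible alternative, the sketch below keeps everything after the last slash, so it also handles shorter references. `target_ref` is an illustrative helper, and registry.example.com is a placeholder rather than a registry used by the original packages.

```shell
Harbor="dockerhub.kubekey.local"
ProjectName="kubesphereio"

# Map a "Loaded image: <ref>" line to the reference the image should get in Harbor.
# ${ref##*/} strips everything up to the last "/", leaving "name:tag".
target_ref() {
  ref=${1#"Loaded image: "}
  echo "$Harbor/$ProjectName/${ref##*/}"
}

target_ref "Loaded image: registry.example.com/kubesphereio/pause:3.8"
target_ref "Loaded image: calico/cni:v3.26.1"
```

With the `awk -F /` approach the second reference would yield an empty name, because it has only two components.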

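9. Install K8s

Because the offline artifact is partly incomplete, the command has to be run twice:

- First run (unpacks the artifact; it may report errors):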

./kk create cluster -f config-sample.yaml -a ks3.4-artifact.tar.gz

- Second run (drop the -a flag; this is the actual installation):

./kk create cluster -f config-sample.yaml
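The two runs can also be wrapped in a small helper so that the second one starts only when the first one fails. This is a sketch under assumptions: `install_cluster` and the `KK` variable are names introduced here, and the commands are the same two invocations shown above.

```shell
KK=${KK:-./kk}   # path to the KubeKey binary

# First pass unpacks the artifact and is allowed to fail;
# the second pass installs from the unpacked files and decides the exit status.
install_cluster() {
  config=$1
  artifact=$2
  "$KK" create cluster -f "$config" -a "$artifact" && return 0
  echo "first pass failed (expected when the artifact is incomplete); retrying without -a"
  "$KK" create cluster -f "$config"
}
```

Usage: `install_cluster config-sample.yaml ks3.4-artifact.tar.gz`. KubeKey still asks for confirmation interactively on each run.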

Wait 10 to 15 minutes until the following success message appears:

clusterconfiguration.installer.kubesphere.io/ks-installer created
13:43:32 CST success: [node1]
#####################################################
###              Welcome to KubeSphere!           ###
#####################################################

Console: http://172.27.36.4:31688
Account: admin
Password: P@88w0rd
NOTES:
  1. After you log into the console, please check the
     monitoring status of service components in
     "Cluster Management". If any service is not
     ready, please wait patiently until all components
     are up and running.
  2. Please change the default password after login.

#####################################################
https://kubesphere.io             2025-03-13 13:50:09
#####################################################
13:50:11 CST success: [node1]
13:50:11 CST Pipeline[CreateClusterPipeline] execute successfully
Installation is complete.

Please check the result using the command:

    kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

While the installation is running, progress can be followed with kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

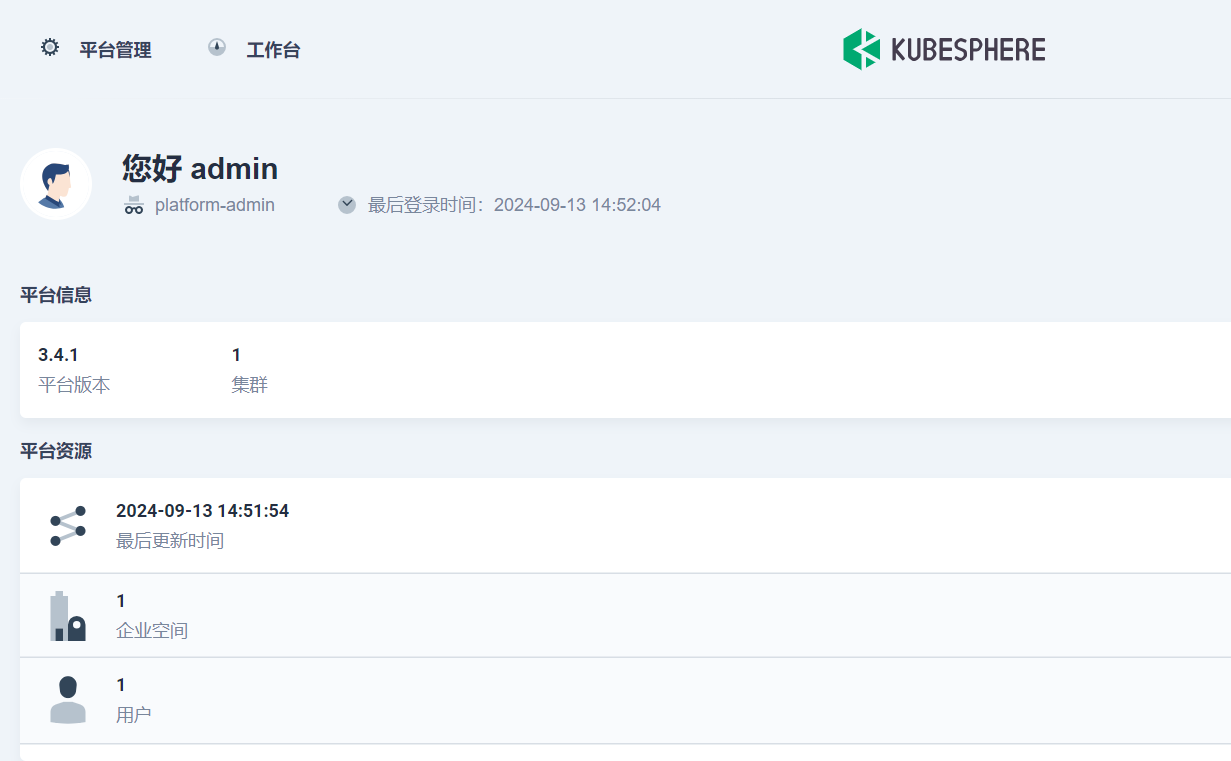

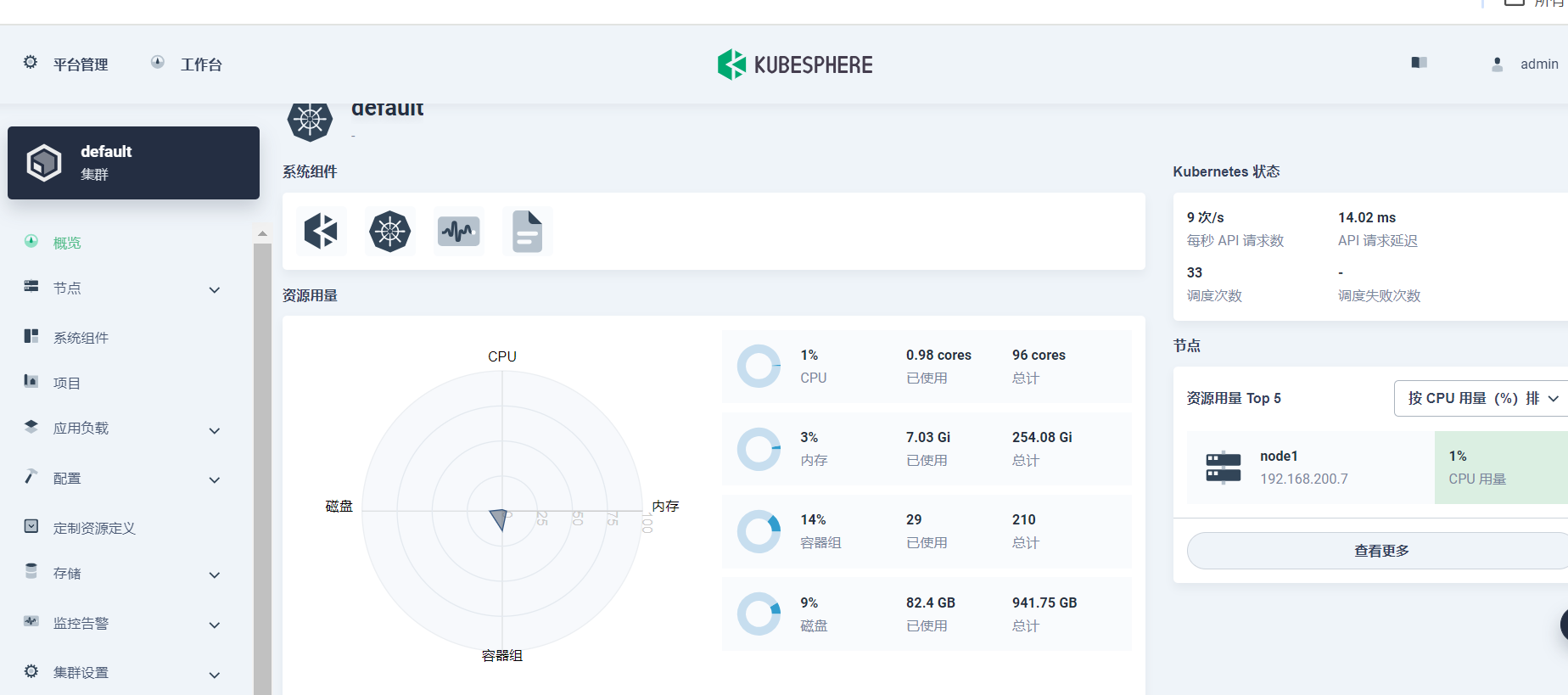

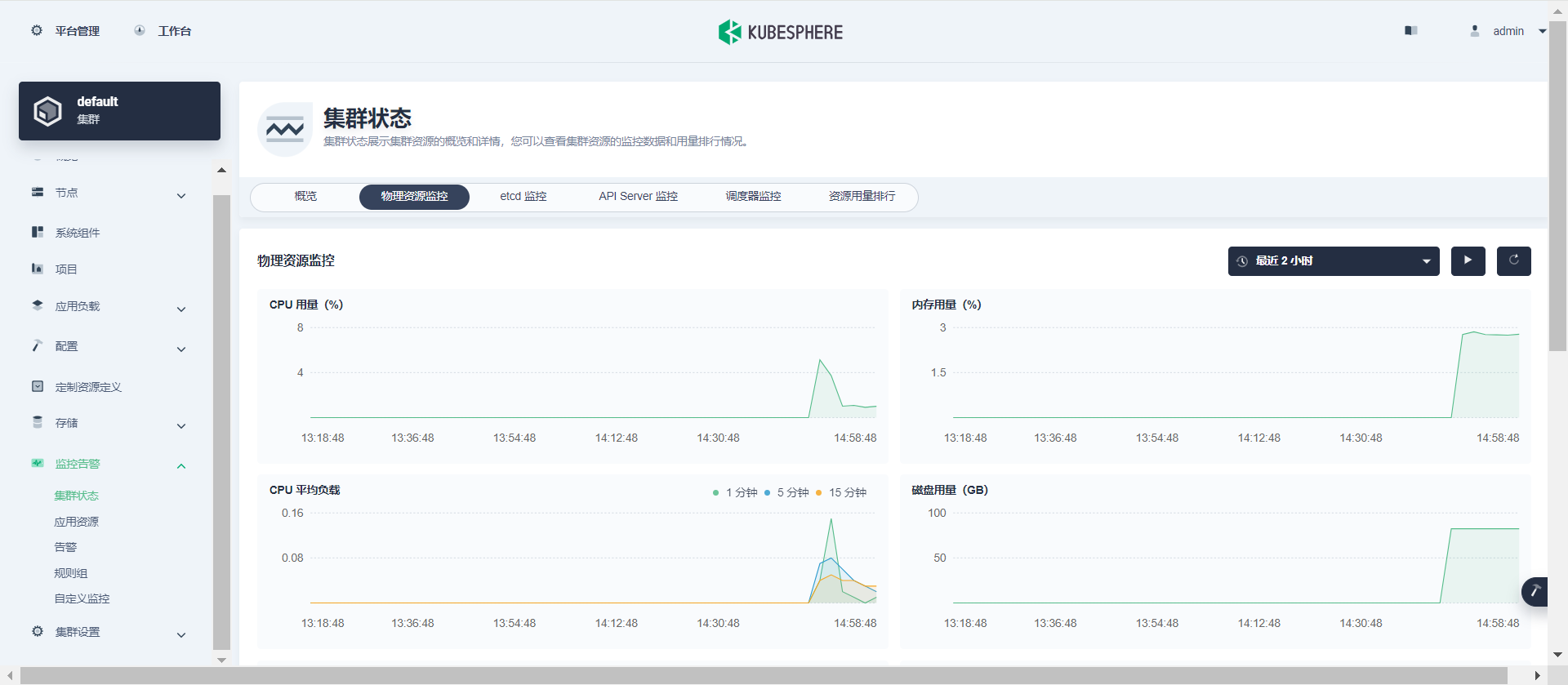

10. Verification

Check the installation status.

The core components are running normally.
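One way to check this from the command line is to list every Pod that is neither Running nor Completed. The filter below is a minimal sketch; `not_ready_pods` is a made-up name, and it assumes the default column layout of `kubectl get pods -A --no-headers` (NAMESPACE, NAME, READY, STATUS, ...).

```shell
# Read `kubectl get pods -A --no-headers` output on stdin and print
# "namespace/name STATUS" for every Pod that is not Running or Completed.
not_ready_pods() {
  awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2 " " $4 }'
}
```

Typical usage is `kubectl get pods -A --no-headers | not_ready_pods`; empty output means nothing is pending or failing. `kubectl get nodes -o wide` additionally shows whether the node itself is Ready.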

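11. Summary

This article described the full process of deploying K8s and KubeSphere offline on Kylin V10 running on Kunpeng chips. The approach is suited to quickly standing up a K8s cluster and the KubeSphere platform in environments without Internet access.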

This article was published via OpenWrite, a multi-platform blog publishing tool.
