Implementing a simple CNN in PyTorch (a much-reduced take on AlexNet):

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 6, 3)  # weight shape [6, 1, 3, 3]: (out_channels, in_channels, kH, kW)
        self.conv2 = nn.Conv2d(6, 16, 3)
        self.fc1 = nn.Linear(16 * 28 * 28, 512)  # spatial size through the two 3x3 convs: 32 -> 30 -> 28
        self.fc2 = nn.Linear(512, 64)
        self.fc3 = nn.Linear(64, 2)

    def forward(self, x):
        x = self.conv1(x)
        x = F.relu(x)
        x = self.conv2(x)
        x = F.relu(x)
        x = x.view(-1, 16 * 28 * 28)  # flatten to [batch, 16*28*28]
        x = self.fc1(x)
        x = F.relu(x)
        x = self.fc2(x)
        x = F.relu(x)
        x = self.fc3(x)
        return x
```
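The `32 -> 30 -> 28` comment, and therefore `fc1`'s input size `16*28*28`, comes from the convolution output-size formula given in the `nn.Conv2d` documentation. Here is a small pure-Python sketch of that formula. The helper name `conv2d_output_size` is hypothetical and not part of PyTorch.

```python
def conv2d_output_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output height (or width) of a 2D convolution along one dimension:
    floor((size + 2*padding - dilation*(kernel_size - 1) - 1) / stride + 1)."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

h = 32  # input images are 32x32
for name, k in [("conv1", 3), ("conv2", 3)]:
    h = conv2d_output_size(h, k)  # no padding, stride 1: each 3x3 conv trims 2 pixels
    print(name, "->", h)

flat_features = 16 * h * h  # 16 channels after conv2, flattened before fc1
print("flattened features:", flat_features)
```

If padding or pooling is added later, re-running the helper shows the new size that `fc1` (and the `view` call) must be changed to.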

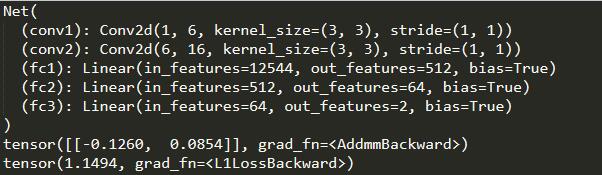

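```python
# Inspect the network structure
net = Net()
print(net)

input_data = torch.randn(1, 1, 32, 32)  # [batch, channels, height, width]

# Run a forward pass
out = net(input_data)
print(out)

# Generate a random "ground truth" with the same shape as out: [1, 2]
target = torch.randn(2)
target = target.view(1, -1)

# Error between prediction and target, using the L1 (mean absolute) loss
criterion = nn.L1Loss()
loss = criterion(out, target)
print(loss)

# Backpropagate the error
net.zero_grad()  # clear any previously accumulated gradients first
loss.backward()  # backward pass: autograd computes the gradients

# Update the weights
optimizer = optim.SGD(net.parameters(), lr=0.01)
optimizer.step()
```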
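You can trace by hand what `loss.backward()` and `optimizer.step()` do in this demo. The sketch below is an illustration, not PyTorch code. It replaces the CNN with a hypothetical two-output model `out_i = w_i * x`, computes the mean L1 loss the way `nn.L1Loss` does with its default `reduction='mean'`, derives the gradient manually, and applies one plain SGD update `w <- w - lr * grad`.

```python
def l1_loss(pred, target):
    # nn.L1Loss with reduction='mean': average absolute error
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)

def sign(v):
    # derivative of |v| (0 at v == 0)
    return (v > 0) - (v < 0)

# Hypothetical tiny "network": two outputs, out_i = w_i * x
x = 2.0
w = [0.5, -1.0]
target = [0.3, 0.4]
lr = 0.01

pred = [wi * x for wi in w]   # forward pass: [1.0, -2.0]
loss = l1_loss(pred, target)  # (|1.0 - 0.3| + |-2.0 - 0.4|) / 2 = 1.55

# Backward pass: d loss / d w_i = sign(out_i - t_i) * x / N
grad = [sign(p - t) * x / len(pred) for p, t in zip(pred, target)]  # [1.0, -1.0]

# optimizer.step() for plain SGD: w <- w - lr * grad
w = [wi - lr * g for wi, g in zip(w, grad)]  # [0.49, -0.99]
print(loss, grad, w)
```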

运行结果

Pytorch实现卷积神经网络图像分类器:

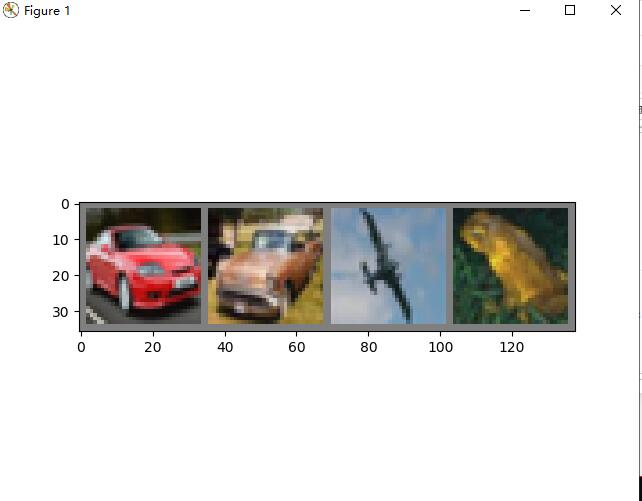

Demo1:加载并显示数据:

```python
import torch
import torchvision
import torchvision.transforms as transforms

transform01 = transforms.Compose(
    [
        transforms.ToTensor(),
        transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))
    ]
)

# Training set
trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transform01)
trainloader = torch.utils.data.DataLoader(trainset, batch_size=4, shuffle=True, num_workers=2)

# Test set
testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=transform01)
testloader = torch.utils.data.DataLoader(testset, batch_size=4, shuffle=False, num_workers=2)

# Display images
import matplotlib.pyplot as plt
import numpy as np

def imshow(img):
    # img is a torch tensor with shape [c, h, w]
    img = img / 2 + 0.5  # undo Normalize: [-1, 1] -> [0, 1]
    npimg = img.numpy()
    npimg = np.transpose(npimg, (1, 2, 0))  # [c, h, w] --> [h, w, c]
    plt.imshow(npimg)
    plt.show()

if __name__ == '__main__':
    # The __main__ guard is needed because the DataLoader worker processes
    # (num_workers=2) are started with 'spawn' and re-import this script.
    torch.multiprocessing.set_start_method('spawn')

    # Load one random mini-batch
    dataIter = iter(trainloader)
    images, labels = next(dataIter)  # use built-in next(); recent PyTorch iterators have no .next() method
    imshow(torchvision.utils.make_grid(images))
```

Output:

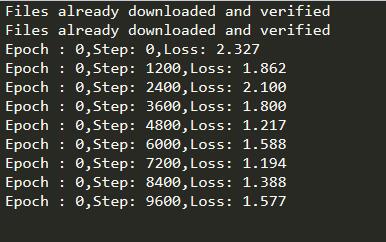
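`transforms.Normalize((0.5,0.5,0.5),(0.5,0.5,0.5))` computes `(x - 0.5) / 0.5` per channel. This maps the [0, 1] range produced by `ToTensor` to [-1, 1]. `imshow` reverses it with `img / 2 + 0.5` and then reorders the axes, because matplotlib expects height x width x channels. The pure-Python sketch below shows both steps. The helper names are illustrative, not library functions.

```python
def normalize(x, mean=0.5, std=0.5):
    # what transforms.Normalize does to each value of a channel
    return (x - mean) / std

def unnormalize(y):
    # what imshow does before plotting: img / 2 + 0.5
    return y / 2 + 0.5

for pixel in (0.0, 0.25, 1.0):
    y = normalize(pixel)
    print(pixel, "->", y, "->", unnormalize(y))

def chw_to_hwc(img):
    # same reordering as np.transpose(npimg, (1, 2, 0))
    c, h, w = len(img), len(img[0]), len(img[0][0])
    return [[[img[k][i][j] for k in range(c)] for j in range(w)] for i in range(h)]

# Hypothetical 3-channel, 2x3 image; value 100*k + 10*i + j marks its position
img = [[[100 * k + 10 * i + j for j in range(3)] for i in range(2)] for k in range(3)]
hwc = chw_to_hwc(img)
print(hwc[1][0])  # all three channel values of the pixel at row 1, column 0
```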

Demo2: training code

```python
import torch
import torchvision
import torchvision.transforms as transforms
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

transform01 = transforms.Compose(
    [
        transforms.ToTensor(),
        transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))
    ]
)

# Training set
trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transform01)
trainloader = torch.utils.data.DataLoader(trainset, batch_size=4, shuffle=True, num_workers=2)

# Test set
testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=transform01)
testloader = torch.utils.data.DataLoader(testset, batch_size=4, shuffle=False, num_workers=2)

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 3)  # CIFAR10 images have 3 (RGB) channels
        self.conv2 = nn.Conv2d(6, 16, 3)
        self.fc1 = nn.Linear(16 * 28 * 28, 512)
        self.fc2 = nn.Linear(512, 64)
        self.fc3 = nn.Linear(64, 10)  # one score per CIFAR10 class

    def forward(self, x):
        x = self.conv1(x)
        x = F.relu(x)
        x = self.conv2(x)
        x = F.relu(x)
        x = x.view(-1, 16 * 28 * 28)
        x = self.fc1(x)
        x = F.relu(x)
        x = self.fc2(x)
        x = F.relu(x)
        x = self.fc3(x)
        return x

net = Net()
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

if __name__ == '__main__':
    torch.multiprocessing.set_start_method('spawn')

    for epoch in range(5):
        for i, data in enumerate(trainloader):
            images, labels = data
            outputs = net(images)
            loss = criterion(outputs, labels)

            optimizer.zero_grad()  # clear gradients from the previous step
            loss.backward()
            optimizer.step()

            if i % 1200 == 0:  # log every 1200 mini-batches
                print('Epoch: %d, Step: %d, Loss: %.3f' % (epoch, i, loss.item()))
```
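Demo2 uses `optim.SGD(..., lr=0.001, momentum=0.9)`. According to the PyTorch documentation for this case (no dampening, no Nesterov, no weight decay), SGD keeps a velocity buffer per parameter. On the first step the buffer equals the gradient. After that, it is updated as `buf = momentum * buf + grad`, and the parameter moves by `-lr * buf`. The function below is a hypothetical pure-Python replay of that rule on plain floats, for illustration only.

```python
def sgd_momentum(params, grads_per_step, lr=0.001, momentum=0.9):
    """Apply one momentum-SGD update per entry of grads_per_step.
    Returns the final parameters and velocity buffer."""
    params = list(params)
    buf = None
    for grads in grads_per_step:
        if buf is None:
            buf = list(grads)  # first step: buffer starts as the gradient
        else:
            buf = [momentum * b + g for b, g in zip(buf, grads)]
        params = [p - lr * b for p, b in zip(params, buf)]
    return params, buf

# Constant gradient 1.0 for three steps, starting from 0.0
p, buf = sgd_momentum([0.0], [[1.0]] * 3, lr=0.1, momentum=0.9)
print(p, buf)  # parameter has moved about 0.561, vs 0.3 without momentum

# With a steady gradient the buffer approaches grad / (1 - momentum) = 10
_, buf_long = sgd_momentum([0.0], [[1.0]] * 200, lr=0.1, momentum=0.9)
print(buf_long)
```

With `momentum=0.9` and a consistent gradient direction, the effective step approaches ten times `lr`. This partly offsets the small learning rate of 0.001 used in Demo2.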

Output:

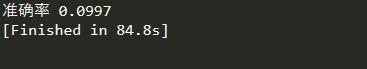
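`nn.CrossEntropyLoss` takes the raw scores from `fc3` (the model's `forward` applies no softmax) and integer class labels. It combines log-softmax with negative log-likelihood and by default averages over the mini-batch. The hand-written sketch below does the same computation; the function names are illustrative. It also gives a useful reference point: a network whose outputs do not distinguish the 10 CIFAR10 classes has a loss of ln 10, about 2.303.

```python
import math

def cross_entropy(logits, label):
    """Loss for one sample: log-sum-exp of the scores minus the score
    of the correct class (i.e. -log softmax(logits)[label])."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[label]

def batch_cross_entropy(batch_logits, labels):
    # reduction='mean': average the per-sample losses
    losses = [cross_entropy(z, y) for z, y in zip(batch_logits, labels)]
    return sum(losses) / len(losses)

uniform = [0.0] * 10
print(cross_entropy(uniform, 3))        # ln(10), about 2.303
print(cross_entropy([10.0, 0.0], 0))    # confident and correct: close to 0
print(cross_entropy([10.0, 0.0], 1))    # confident and wrong: about 10
```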

Demo3: measuring test accuracy

```python
import torch
import torchvision
import torchvision.transforms as transforms
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

transform01 = transforms.Compose(
    [
        transforms.ToTensor(),
        transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))
    ]
)

# Training set
trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transform01)
trainloader = torch.utils.data.DataLoader(trainset, batch_size=4, shuffle=True, num_workers=2)

# Test set
testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=transform01)
testloader = torch.utils.data.DataLoader(testset, batch_size=4, shuffle=False, num_workers=2)

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 3)
        self.conv2 = nn.Conv2d(6, 16, 3)
        self.fc1 = nn.Linear(16 * 28 * 28, 512)
        self.fc2 = nn.Linear(512, 64)
        self.fc3 = nn.Linear(64, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = F.relu(x)
        x = self.conv2(x)
        x = F.relu(x)
        x = x.view(-1, 16 * 28 * 28)
        x = self.fc1(x)
        x = F.relu(x)
        x = self.fc2(x)
        x = F.relu(x)
        x = self.fc3(x)
        return x

net = Net()
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

if __name__ == '__main__':
    torch.multiprocessing.set_start_method('spawn')

    # Test the model.
    # NOTE: net is freshly constructed here (never trained, no weights loaded),
    # so it still has random parameters.
    correct = 0
    total = 0
    with torch.no_grad():  # no gradients needed for evaluation
        for data in testloader:
            images, labels = data
            outputs = net(images)
            _, predicted = torch.max(outputs, 1)  # index of the highest score in each row
            correct += (predicted == labels).sum().item()
            total += labels.size(0)
    print('Accuracy', correct / total)
```

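Output:

The accuracy is quite low. That is expected for this script: it builds a fresh `Net()` and evaluates it without training it or loading trained weights, so the score sits near chance level (about 10% for CIFAR10's ten classes). The complete code below trains the model before testing it.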
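You can replay the `correct`/`total` bookkeeping from the test loop in plain Python. In this sketch, the helpers `argmax` and `accuracy` are hypothetical, and random scores stand in for a model that has learned nothing. Guessing among ten classes gives an accuracy close to 0.1.

```python
import random

def argmax(scores):
    # index of the largest score (the role of torch.max(outputs, 1) per row)
    return max(range(len(scores)), key=lambda i: scores[i])

def accuracy(batches):
    """batches: iterable of (outputs, labels), where outputs is a list of
    per-class score lists, one per image in the mini-batch."""
    correct = 0
    total = 0
    for outputs, labels in batches:
        predicted = [argmax(row) for row in outputs]
        correct += sum(p == y for p, y in zip(predicted, labels))
        total += len(labels)
    return correct / total

# Simulated guessing: 2500 mini-batches of 4 images, 10 classes, random scores
rng = random.Random(0)
sim_batches = []
for _ in range(2500):
    outputs = [[rng.random() for _ in range(10)] for _ in range(4)]
    labels = [rng.randrange(10) for _ in range(4)]
    sim_batches.append((outputs, labels))

chance = accuracy(sim_batches)
print(f"random-guess accuracy: {chance:.3f}")
```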

Complete code:

```python
import torch
import torchvision
import torchvision.transforms as transforms
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim

transform01 = transforms.Compose(
    [
        transforms.ToTensor(),
        transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))
    ]
)

# Training set
trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transform01)
trainloader = torch.utils.data.DataLoader(trainset, batch_size=4, shuffle=True, num_workers=2)

# Test set
testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=transform01)
testloader = torch.utils.data.DataLoader(testset, batch_size=4, shuffle=False, num_workers=2)

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 3)
        self.conv2 = nn.Conv2d(6, 16, 3)
        self.fc1 = nn.Linear(16 * 28 * 28, 512)
        self.fc2 = nn.Linear(512, 64)
        self.fc3 = nn.Linear(64, 10)

    def forward(self, x):
        x = self.conv1(x)
        x = F.relu(x)
        x = self.conv2(x)
        x = F.relu(x)
        x = x.view(-1, 16 * 28 * 28)
        x = self.fc1(x)
        x = F.relu(x)
        x = self.fc2(x)
        x = F.relu(x)
        x = self.fc3(x)
        return x

net = Net()
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)

if __name__ == '__main__':
    # Train the model
    for epoch in range(5):
        for i, data in enumerate(trainloader):
            images, labels = data
            outputs = net(images)
            loss = criterion(outputs, labels)

            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            if i % 2400 == 0:  # log every 2400 mini-batches
                print('Epoch: %d, Step: %d, Loss: %.3f' % (epoch, i, loss.item()))

    # Save the trained weights
    torch.save(net.state_dict(), './model.pt')

    # Load them into a new instance
    net_2 = Net()
    net_2.load_state_dict(torch.load('./model.pt'))

    # Measure test accuracy
    correct = 0
    total = 0
    with torch.no_grad():
        for data in testloader:
            images, labels = data
            outputs = net_2(images)
            _, predicted = torch.max(outputs, 1)
            correct += (predicted == labels).sum().item()
            total += labels.size(0)
    print('Accuracy', correct / total)
```

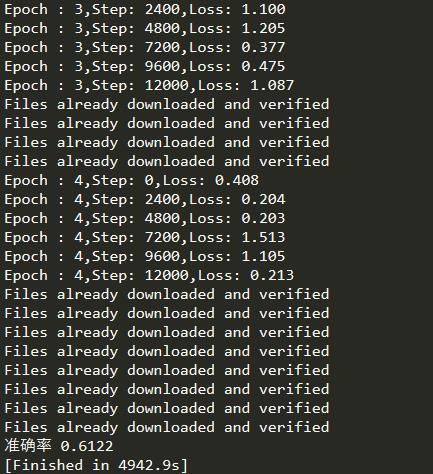

运行结果:
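The `model.pt` file written by `torch.save(net.state_dict(), ...)` stores every weight and bias of the network. The sketch below counts them by hand with illustrative helpers; in PyTorch itself, `sum(p.numel() for p in net.parameters())` gives the same total. The count shows that `fc1` holds over 99% of the roughly 6.46 million parameters, which is about 25.8 MB of float32 data before serialization overhead.

```python
def conv2d_params(in_ch, out_ch, k):
    # weights (out_ch x in_ch x k x k) plus one bias per output channel
    return out_ch * in_ch * k * k + out_ch

def linear_params(in_f, out_f):
    # weight matrix (out_f x in_f) plus one bias per output
    return out_f * in_f + out_f

layers = {
    "conv1": conv2d_params(3, 6, 3),
    "conv2": conv2d_params(6, 16, 3),
    "fc1": linear_params(16 * 28 * 28, 512),
    "fc2": linear_params(512, 64),
    "fc3": linear_params(64, 10),
}
total = sum(layers.values())
for name, n in layers.items():
    print(f"{name:>5}: {n:>9,} ({n / total:.1%})")
print(f"total: {total:,} parameters, about {total * 4 / 1e6:.1f} MB as float32")
```

Nearly all of that size comes from feeding the unpooled 16x28x28 feature map straight into `fc1`. A pooling layer before `fc1` would shrink it considerably.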
