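This section shows how to use OpenCV together with TensorFlow to recognize what a webcam sees.

Introduction

In the previous lesson we installed TensorFlow and ran the official example, which successfully detected objects in the official sample images. Can we feed TensorFlow our own image source instead? Yes. In this lesson we look at how to connect a local webcam to TensorFlow.

If you have not read the previous post yet, follow the link below to learn how to set up the TensorFlow development environment.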

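Installing OpenCV

To work with the webcam we first need OpenCV. Because we are on Windows, we install OpenCV from a prebuilt whl file. Go to the address below and pick the whl file that matches your setup: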

https://www.lfd.uci.edu/~gohlke/pythonlibs/#opencv

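I downloaded opencv_python-3.4.1+contrib-cp36-cp36m-win_amd64.whl. Here cp36 means CPython 3.6, win means Windows, and amd64 means 64-bit. If you use a different Python version, pick the file with the matching cp tag and bitness. I recommend using the same environment as this tutorial to avoid unnecessary trouble.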
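To find out which tags your own interpreter needs, a short script can print them. This is only a minimal sketch, assuming CPython on Windows; the function name expected_wheel_tags is made up for illustration and is not part of pip or OpenCV.

```python
import struct
import sys


def expected_wheel_tags():
    """Return the (python_tag, platform_tag) this interpreter would need on Windows."""
    # "cp36" means CPython 3.6: "cp" followed by the major and minor version.
    python_tag = "cp{}{}".format(sys.version_info.major, sys.version_info.minor)
    # The pointer size in bits tells us whether Python itself is 32-bit or 64-bit.
    bits = struct.calcsize("P") * 8
    # Assumption: we are on Windows, so only these two platform tags are considered.
    platform_tag = "win_amd64" if bits == 64 else "win32"
    return python_tag, platform_tag


print(expected_wheel_tags())
```

If the printed tags differ from cp36 and win_amd64, choose the whl file whose name contains your tags instead.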

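After the download finishes, go to the directory that contains the file and run:

pip install opencv_python-3.4.1+contrib-cp36-cp36m-win_amd64.whl

That completes the installation. If you do not have Anaconda installed, some packages may fail to install, because OpenCV depends on many other packages.

Testing the installation

Enter the following commands in the Python interactive shell. If the output is 3.4.1, the installation succeeded and we have OpenCV version 3.4.1:

import cv2

cv2.__version__

Some readers may never have used OpenCV before, so here is a small example: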

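import numpy as np
import cv2

# Open the local webcam. The argument is the device index: the first device is 0,
# and if the computer has two cameras, the second one is 1.
cap = cv2.VideoCapture(0)

while True:
    # Read one frame from the camera. The while loop keeps reading frames,
    # and the sequence of images forms the video.
    ret, image = cap.read()
    # Stop if no frame could be read (for example, the camera is unavailable).
    if not ret:
        break
    # Convert the color format of each frame (cv2.COLOR_BGR2BGRA has the value 0).
    img_color = cv2.cvtColor(image, cv2.COLOR_BGR2BGRA)
    # Show the frame in a window.
    cv2.imshow('window', img_color)
    # Close the window when Q is pressed on the keyboard.
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Release the camera.
cap.release()
# Close the window.
cv2.destroyAllWindows()

This example shows that cap.read() reads one frame from the camera. Inside an infinite loop it keeps reading frame after frame, and each call returns two values: the first says whether the read succeeded, and the second is the data of the current frame. This means we can replace the image used in the TensorFlow example with the image returned by cap.read().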
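One detail is worth keeping in mind when swapping the image source: OpenCV returns frames in BGR channel order, while the official example loads its test images with PIL, which yields RGB. The script in this post feeds the frame to the model unchanged, so the sketch below is an optional refinement rather than part of the original method. The helper name frame_to_model_input is hypothetical, and a synthetic array stands in for a real camera frame.

```python
import numpy as np


def frame_to_model_input(frame_bgr):
    """Turn one OpenCV-style frame (H, W, 3, BGR) into a (1, H, W, 3) RGB batch."""
    # Reverse the last axis to swap the blue and red channels.
    frame_rgb = frame_bgr[:, :, ::-1]
    # Add a leading batch dimension, since the model takes a batch of images.
    return np.expand_dims(frame_rgb, axis=0)


# A synthetic 2x2 pure-blue "frame" stands in for the output of cap.read().
fake_frame = np.zeros((2, 2, 3), dtype=np.uint8)
fake_frame[:, :, 0] = 255  # channel 0 is blue in BGR order
batch = frame_to_model_input(fake_frame)
print(batch.shape)
```

With a real camera, the result of this helper would be passed in place of image_np_expanded, while the original BGR frame is still the one to draw on and show with cv2.imshow.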

Make a few small changes to the official TensorFlow example code:

import numpy as np

import os

import six.moves.urllib as urllib

import sys

import tarfile

import tensorflow as tf

import zipfile

from collections import defaultdict

from io import StringIO

from matplotlib import pyplot as plt

from PIL import Image

import cv2

cap = cv2.VideoCapture(0)

# This is needed since the notebook is stored in the object_detection folder.

sys.path.append("..")

# ## Object detection imports

# Here are the imports from the object detection module.

# In[3]:

from utils import label_map_util

from utils import visualization_utils as vis_util

# # Model preparation

# ## Variables

#

# Any model exported using the `export_inference_graph.py` tool can be loaded here simply by changing `PATH_TO_CKPT` to point to a new .pb file.

#

# By default we use an "SSD with Mobilenet" model here. See the [detection model zoo](https://github.com/tensorflow/models/blob/master/object_detection/g3doc/detection_model_zoo.md) for a list of other models that can be run out-of-the-box with varying speeds and accuracies.

# In[4]:

# What model to download.

MODEL_NAME = 'ssd_mobilenet_v1_coco_11_06_2017'

MODEL_FILE = MODEL_NAME + '.tar.gz'

DOWNLOAD_BASE = 'http://download.tensorflow.org/models/object_detection/'

# Path to frozen detection graph. This is the actual model that is used for the object detection.

PATH_TO_CKPT = MODEL_NAME + '/frozen_inference_graph.pb'

# List of the strings that is used to add correct label for each box.

PATH_TO_LABELS = os.path.join('data', 'mscoco_label_map.pbtxt')

NUM_CLASSES = 90

# ## Download Model

# In[5]:

opener = urllib.request.URLopener()

opener.retrieve(DOWNLOAD_BASE + MODEL_FILE, MODEL_FILE)

tar_file = tarfile.open(MODEL_FILE)

for file in tar_file.getmembers():
    file_name = os.path.basename(file.name)
    if 'frozen_inference_graph.pb' in file_name:
        tar_file.extract(file, os.getcwd())

# ## Load a (frozen) Tensorflow model into memory.

# In[6]:

detection_graph = tf.Graph()

with detection_graph.as_default():
    od_graph_def = tf.GraphDef()
    with tf.gfile.GFile(PATH_TO_CKPT, 'rb') as fid:
        serialized_graph = fid.read()
        od_graph_def.ParseFromString(serialized_graph)
        tf.import_graph_def(od_graph_def, name='')

# ## Loading label map

# Label maps map indices to category names, so that when our convolution network predicts `5`, we know that this corresponds to `airplane`. Here we use internal utility functions, but anything that returns a dictionary mapping integers to appropriate string labels would be fine

# In[7]:

label_map = label_map_util.load_labelmap(PATH_TO_LABELS)

categories = label_map_util.convert_label_map_to_categories(label_map, max_num_classes=NUM_CLASSES, use_display_name=True)

category_index = label_map_util.create_category_index(categories)

# ## Helper code

# In[8]:

def load_image_into_numpy_array(image):
    (im_width, im_height) = image.size
    return np.array(image.getdata()).reshape(
        (im_height, im_width, 3)).astype(np.uint8)

# # Detection

# In[9]:

# For the sake of simplicity we will use only 2 images:

# image1.jpg

# image2.jpg

# If you want to test the code with your images, just add path to the images to the TEST_IMAGE_PATHS.

PATH_TO_TEST_IMAGES_DIR = 'test_images'

TEST_IMAGE_PATHS = [ os.path.join(PATH_TO_TEST_IMAGES_DIR, 'image{}.jpg'.format(i)) for i in range(1, 3) ]

# Size, in inches, of the output images.

IMAGE_SIZE = (12, 8)

# In[10]:

with detection_graph.as_default():

with tf.Session(graph=detection_graph) as sess:

while True:

ret, image_np = cap.read()

# Expand dimensions since the model expects images to have shape: [1, None, None, 3]

image_np_expanded = np.expand_dims(image_np, axis=0)

image_tensor = detection_graph.get_tensor_by_name('image_tensor:0')

# Each box represents a part of the image where a particular object was detected.

boxes = detection_graph.get_tensor_by_name('detection_boxes:0')

# Each score represent how level of confidence for each of the objects.

# Score is shown on the result image, together with the class label.

scores = detection_graph.get_tensor_by_name('detection_scores:0')

classes = detection_graph.get_tensor_by_name('detection_classes:0')

num_detections = detection_graph.get_tensor_by_name('num_detections:0')

# Actual detection.

(boxes, scores, classes, num_detections) = sess.run(

[boxes, scores, classes, num_detections],

feed_dict={image_tensor: image_np_expanded})

# Visualization of the results of a detection.

vis_util.visualize_boxes_and_labels_on_image_array(

image_np,

np.squeeze(boxes),

np.squeeze(classes).astype(np.int32),

np.squeeze(scores),

category_index,

use_normalized_coordinates=True,

line_thickness=8)

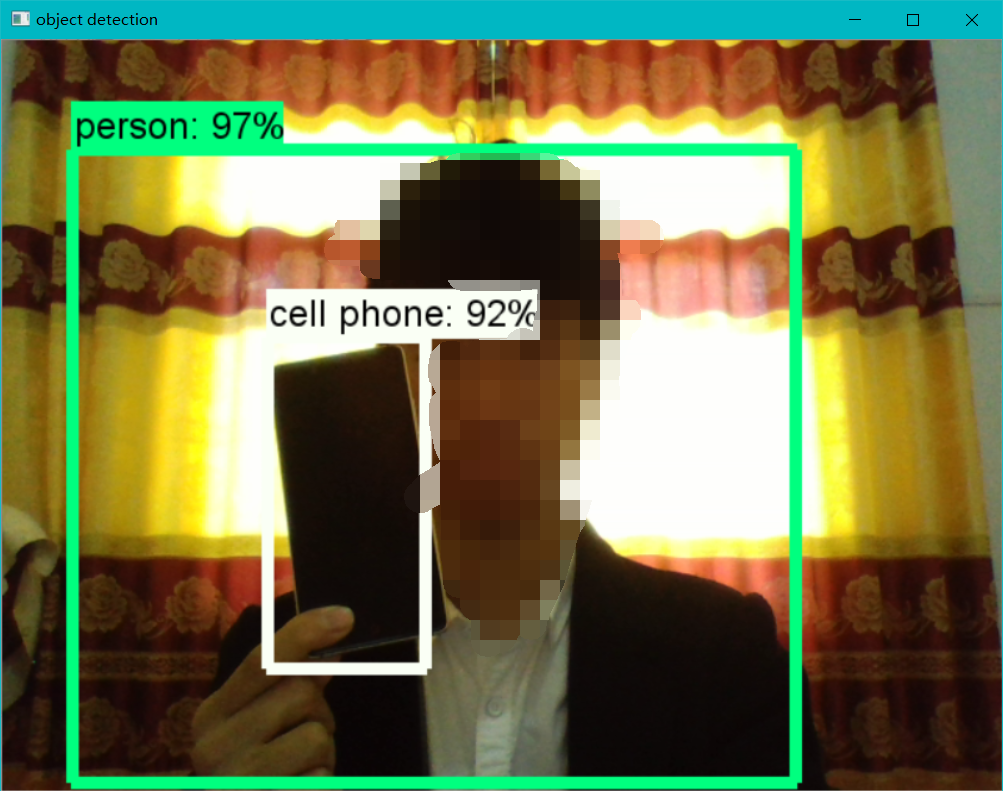

cv2.imshow('object detection', cv2.resize(image_np, (800,600)))

if cv2.waitKey(25) & 0xFF == ord('q'):

cv2.destroyAllWindows()

break小提示:大家可以直接复制代码,保存为py文件。也可以尝试自己分析官方示例代码,然后尝试去修改一下~

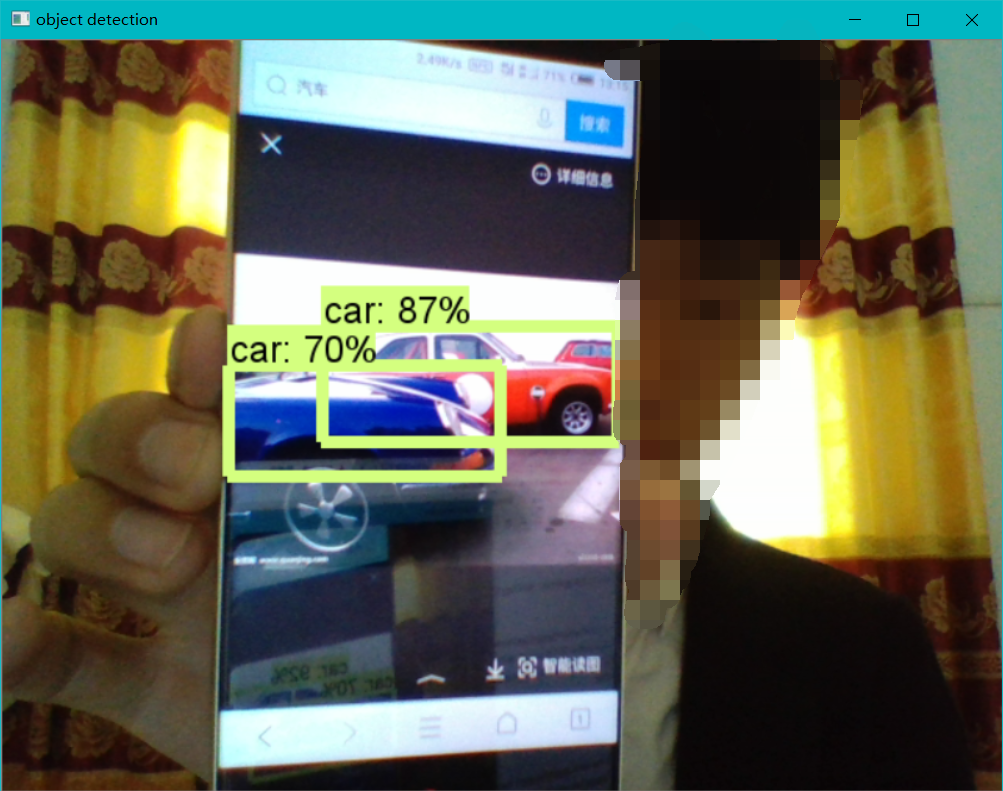

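Save the file in the D:\code\python\TensorFlow\models\research\object_detection directory, then open a command line in that directory, type python followed by the file name (for example, python filename.py), and press Enter to run it. Try it and see which objects TensorFlow can recognize through your webcam. It recognizes far more than just people: cars, cups, phones, coats, sofas, and many more.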
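If you would rather see the recognized objects as text than read them off the boxes in the window, the arrays returned by sess.run can be turned into a list of labels. The following is a rough sketch, not code from the official example: the helper summarize_detections and the 0.5 score threshold are assumptions, and the demo values are made up. It only assumes that category_index maps a class id to a dictionary with a 'name' entry, which is how the script above uses it.

```python
import numpy as np


def summarize_detections(scores, classes, category_index, min_score=0.5):
    """Return (label, score) pairs for detections scoring at least min_score."""
    flat_scores = np.asarray(scores).reshape(-1)
    flat_classes = np.asarray(classes).reshape(-1).astype(np.int32)
    results = []
    for score, class_id in zip(flat_scores, flat_classes):
        # Skip low-confidence detections.
        if score < min_score:
            continue
        # Fall back to "unknown" if the id is missing from the label map.
        label = category_index.get(int(class_id), {}).get("name", "unknown")
        results.append((label, float(score)))
    return results


# Made-up values shaped like the model output for one frame: [1, N].
demo_index = {1: {"id": 1, "name": "person"}, 47: {"id": 47, "name": "cup"}}
demo_scores = np.array([[0.92, 0.61, 0.10]])
demo_classes = np.array([[1.0, 47.0, 3.0]])
demo_result = summarize_detections(demo_scores, demo_classes, demo_index)
print(demo_result)
```

Inside the detection loop, the same call would take the scores, classes, and category_index variables from the script, for example to print the labels for each frame.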

You may see a warning when it runs:

UserWarning: This call to matplotlib.use() has no effect
because the backend has already been chosen;
matplotlib.use() must be called *before* pylab, matplotlib.pyplot,
or matplotlib.backends is imported for the first time.
warnings.warn(_use_error_msg)

You can ignore this warning. As long as the log output then continues normally and a window opens showing the camera feed, everything is working.
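If the warning is distracting, Python's standard warnings module can hide it. This is an optional sketch and not something the script needs: the helper name silence_matplotlib_backend_warning is hypothetical, and the message pattern is copied from the log above, so it may need adjusting if your matplotlib version words the warning differently.

```python
import warnings


def silence_matplotlib_backend_warning():
    """Ignore the 'matplotlib.use() has no effect' UserWarning."""
    warnings.filterwarnings(
        "ignore",
        # The pattern is matched at the start of the message; \s* allows for
        # leading whitespace or a newline before the text.
        message=r"\s*This call to matplotlib\.use\(\) has no effect",
        category=UserWarning,
    )


# Call this near the top of the script, before the imports that trigger the warning.
silence_matplotlib_backend_warning()
```

Other warnings are unaffected, since the filter only matches this one message.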

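I hope you don't mind that I blurred myself out in the screenshots; I didn't want to spoil your mood for learning.

In the second image I was also detected as a person, but the Person box happened to flicker off just as I took the screenshot, so it was not captured. If you are interested, try it on your graduation photo; you will be amazed at what TensorFlow can do.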
