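In practice, servers often ship with disks that are too small, while the application needs a single large partition for data storage. Besides combining multiple disks with a hardware RAID card, the same result can be achieved in software.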

Test environment

Virtual machine: CentOS 6.6 x64

Disk 1: /dev/sdb

Disk 2: /dev/sdc

Disk 3: /dev/sdd

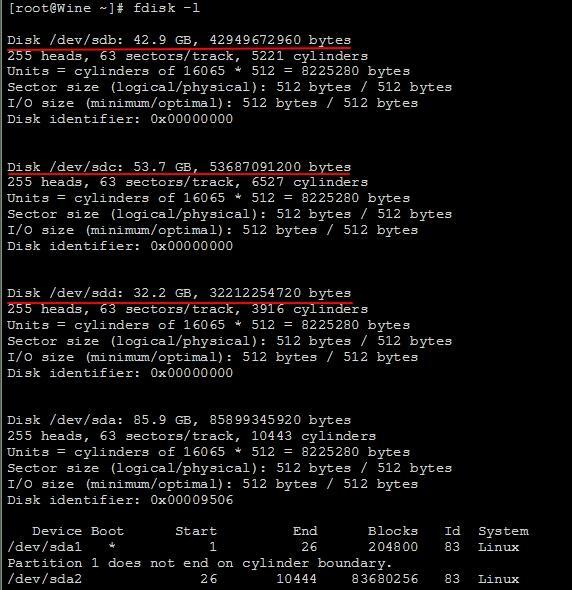

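The detailed disk list is shown below:

Merging disks with LVM

LVM (Logical Volume Manager) is used here for the following purpose:

Combine several independent disks into one logical disk and mount it at a single mount point, so that one directory can use the space of all the disks.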

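LVM concepts

PV (Physical Volume)

A partition that has been created but not yet formatted with a filesystem can be turned into a PV with the pvcreate command. The matching partition system ID is 8e, the identifier for Linux LVM. (A whole disk can also serve as a PV, which is what this article does.)

VG (Volume Group)

vgcreate combines one or more PVs into a volume group, which behaves like a new disk assembled from several partitions. Although a VG spans multiple PVs, creating it divides all of its space into PEs of the specified size. LVM allocates storage in units of PEs, much like blocks in a filesystem.

PE (Physical Extent)

The PE is the storage unit of a VG; the actual data is stored in PEs.

LV (Logical Volume)

If a VG is the equivalent of a combined disk, an LV is the equivalent of a partition carved out of that VG. A VG contains many PEs; you can assign an LV a given number of PEs or simply specify its size. Once created, an LV is used like a partition: it only needs to be formatted with a filesystem.

LE (Logical Extent)

The PE is the physical storage unit, while the LE is the logical storage unit inside an LV, and the two have the same size. Carving an LV out of a VG really means assigning PEs from the VG; once they belong to the LV they are referred to as LEs rather than PEs.

LVM can grow and shrink volumes because resizing an LV simply adds PEs to it or removes PEs from it.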
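To make the extent arithmetic concrete, the following sketch estimates how many PEs fit on a device. The function name `pe_count` and the 1 MiB metadata reservation are illustrative assumptions; the actual reserved area depends on the LVM version and its alignment settings.

```shell
#!/bin/sh
# Estimate how many physical extents (PEs) fit on a PV.
# Assumption: about 1 MiB at the start of the device is reserved for LVM
# metadata; the real figure depends on the LVM version and alignment.

pe_count() {
    dev_mib=$1          # device size in MiB
    pe_mib=$2           # PE size in MiB (the LVM default is 4)
    meta_mib=${3:-1}    # assumed metadata reservation in MiB
    echo $(( (dev_mib - meta_mib) / pe_mib ))
}

# A 40 GiB disk with 4 MiB extents
pe_count $((40 * 1024)) 4
```

Under these assumptions the 40 GiB disk yields 10239 extents rather than 10240, which agrees with the Total PE value that vgdisplay reports for /dev/sdb in this article.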

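How LVM stores data

An LV is carved out of a VG, so its PEs may come from several PVs. When data is written to an LV, one of two allocation modes applies:

- Linear: data fills the PEs that belong to one PV first, then continues on the PEs of the next PV.

- Striped: each piece of data is split into chunks that are written across every PV backing the LV, similar to RAID 0, so read/write performance is better than in linear mode.

Although striped mode performs better, LVM is mainly about flexible capacity rather than performance. If read/write performance is the goal, RAID is the recommended approach.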
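The difference between the two modes can be sketched with a toy model that answers "which PV holds chunk N of the LV?". The function names and the three tiny PV sizes are made up for illustration; real LVM allocates in extents and uses a configurable stripe size, so this is a simplified picture rather than the actual allocator.

```shell
#!/bin/sh
# Simplified model of where the Nth chunk of an LV is stored.
# PV sizes are given in chunks; treat this as an illustration only.

# Linear: fill the first PV completely, then move on to the next one.
linear_pv() {
    chunk=$1; shift
    pv=0
    for size in "$@"; do
        if [ "$chunk" -lt "$size" ]; then
            echo "$pv"
            return 0
        fi
        chunk=$((chunk - size))
        pv=$((pv + 1))
    done
    echo "out of range" >&2
    return 1
}

# Striped: consecutive chunks rotate across all PVs (like RAID 0).
striped_pv() {
    chunk=$1
    pv_total=$2
    echo $((chunk % pv_total))
}

# Three hypothetical PVs holding 4, 5 and 3 chunks respectively
for n in 0 1 4 9; do
    echo "chunk $n -> linear PV $(linear_pv "$n" 4 5 3), striped PV $(striped_pv "$n" 3)"
done
```

In the linear case neighbouring chunks stay on the same PV until it is full, while in the striped case they land on different PVs and can be read or written in parallel. On a real system, striping is requested with lvcreate's `-i` (number of stripes) and `-I` (stripe size) options; a striped LV takes the same number of extents from each PV, so with disks of unequal size it is limited by the smallest one.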

LVM diagram

Steps to create the LVM volume

1. Create the PVs (Physical Volume)

    [root@Wine ~]# pvcreate /dev/sdb /dev/sdc /dev/sdd
      Physical volume "/dev/sdb" successfully created
      Physical volume "/dev/sdc" successfully created
      Physical volume "/dev/sdd" successfully created

2. List the PVs that were created

    [root@Wine ~]# pvs    # list PVs
      PV         VG   Fmt  Attr PSize  PFree
      /dev/sdb        lvm2 ---  40.00g 40.00g
      /dev/sdc        lvm2 ---  50.00g 50.00g
      /dev/sdd        lvm2 ---  30.00g 30.00g

or

    [root@Wine ~]# pvscan
      PV /dev/sdb                      lvm2 [40.00 GiB]
      PV /dev/sdc                      lvm2 [50.00 GiB]
      PV /dev/sdd                      lvm2 [30.00 GiB]
      Total: 3 [120.00 GiB] / in use: 0 [0   ] / in no VG: 3 [120.00 GiB]
    [root@Wine ~]# pvdisplay    # show detailed PV information
      "/dev/sdb" is a new physical volume of "40.00 GiB"
      --- NEW Physical volume ---
      PV Name               /dev/sdb
      VG Name
      PV Size               40.00 GiB
      Allocatable           NO
      PE Size               0
      Total PE              0
      Free PE               0
      Allocated PE          0
      PV UUID               9vAxyC-FsAc-S2HA-aCze-MZe5-em24-7th27s

      "/dev/sdc" is a new physical volume of "50.00 GiB"
      --- NEW Physical volume ---
      PV Name               /dev/sdc
      VG Name
      PV Size               50.00 GiB
      Allocatable           NO
      PE Size               0
      Total PE              0
      Free PE               0
      Allocated PE          0
      PV UUID               HdbCuK-hFkP-QQbr-Naaa-PNzz-WFNw-78uXs3

      "/dev/sdd" is a new physical volume of "30.00 GiB"
      --- NEW Physical volume ---
      PV Name               /dev/sdd
      VG Name
      PV Size               30.00 GiB
      Allocatable           NO
      PE Size               0
      Total PE              0
      Free PE               0
      Allocated PE          0
      PV UUID               EpPdAf-ku4b-zIMm-V2Np-gnuC-59nj-L0Zd9G

3. Create the VG (Volume Group)

The syntax for creating a VG is:

vgcreate [VG name] [device...]

    [root@Wine ~]# vgcreate TestLVM /dev/sdb    # create the initial volume group
      Volume group "TestLVM" successfully created
    [root@Wine ~]# vgdisplay    # show detailed VG information
      --- Volume group ---
      VG Name               TestLVM
      System ID
      Format                lvm2
      Metadata Areas        1
      Metadata Sequence No  1
      VG Access             read/write
      VG Status             resizable
      MAX LV                0
      Cur LV                0
      Open LV               0
      Max PV                0
      Cur PV                1
      Act PV                1
      VG Size               40.00 GiB
      PE Size               4.00 MiB
      Total PE              10239
      Alloc PE / Size       0 / 0
      Free  PE / Size       10239 / 40.00 GiB
      VG UUID               s0gVkf-FScU-7x9v-HIx3-cinR-Sc60-IHgKmn

4. Add PVs to the VG

The syntax for adding PVs to a VG is:

vgextend [VG name] [device...]

    [root@Wine ~]# vgextend TestLVM /dev/sdc /dev/sdd    # extend the VG so that all three disks belong to one volume group
      Volume group "TestLVM" successfully extended
    [root@Wine ~]# vgdisplay    # inspect the extended VG
      --- Volume group ---
      VG Name               TestLVM
      System ID
      Format                lvm2
      Metadata Areas        3
      Metadata Sequence No  2
      VG Access             read/write
      VG Status             resizable
      MAX LV                0
      Cur LV                0
      Open LV               0
      Max PV                0
      Cur PV                3
      Act PV                3
      VG Size               119.99 GiB    # note the difference from the earlier output
      PE Size               4.00 MiB
      Total PE              30717
      Alloc PE / Size       0 / 0
      Free  PE / Size       30717 / 119.99 GiB
      VG UUID               s0gVkf-FScU-7x9v-HIx3-cinR-Sc60-IHgKmn
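The VG Size and Total PE figures above can be checked by hand. The sketch below adds up the extents contributed by each PV; the count for /dev/sdb (10239) comes from the vgdisplay output, while the counts for the 50 GiB and 30 GiB disks (12799 and 7679) are derived on the assumption that each PV loses one extent to metadata in the same way.

```shell
#!/bin/sh
# Add up the extents contributed by each PV and convert the total to GiB.
# 10239 is taken from vgdisplay; 12799 and 7679 are derived values
# (assumption: each PV loses one 4 MiB extent to metadata).

pe_size_mib=4
total_pe=0
for pe in 10239 12799 7679; do
    total_pe=$((total_pe + pe))
done

total_mib=$((total_pe * pe_size_mib))
echo "Total PE: $total_pe"
# awk handles the fractional GiB value, which shell arithmetic cannot
echo "VG size:  $(awk -v m="$total_mib" 'BEGIN { printf "%.2f", m / 1024 }') GiB"
```

The total comes to 30717 extents, or 119.99 GiB, which is why the VG is slightly smaller than the nominal 120 GiB of the three disks.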

5. Create the LV (Logical Volume)

The syntax for creating an LV is:

lvcreate -L [size] -n [LV name] [VG name]

or

lvcreate -l [percentage]%{VG|FREE|ORIGIN} -n [LV name] [VG name]

    [root@Wine ~]# lvcreate -l 100%VG -nTestData TestLVM    # create the LV
      Logical volume "TestData" created
    [root@Wine ~]# lvscan    # list the LVs
      ACTIVE            '/dev/TestLVM/TestData' [119.99 GiB] inherit
    [root@Wine ~]# lvdisplay    # show detailed LV information
      --- Logical volume ---
      LV Path                /dev/TestLVM/TestData
      LV Name                TestData
      VG Name                TestLVM
      LV UUID                2zvNe9-dtlv-pcWc-oTnJ-6INz-e2dI-vRQ7Vq
      LV Write Access        read/write
      LV Creation host, time Wine, 2018-11-14 11:01:56 +0800
      LV Status              available
      # open                 0
      LV Size                119.99 GiB
      Current LE             30717
      Segments               3
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     256
      Block device           253:0

6. Format the volume

    [root@Wine ~]# mkfs -t ext4 /dev/TestLVM/TestData    # create an ext4 filesystem
    mke2fs 1.41.12 (17-May-2010)
    Filesystem label=
    OS type: Linux
    Block size=4096 (log=2)
    Fragment size=4096 (log=2)
    Stride=0 blocks, Stripe width=0 blocks
    7864320 inodes, 31454208 blocks
    1572710 blocks (5.00%) reserved for the super user
    First data block=0
    Maximum filesystem blocks=4294967296
    960 block groups
    32768 blocks per group, 32768 fragments per group
    8192 inodes per group
    Superblock backups stored on blocks:
        32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,
        4096000, 7962624, 11239424, 20480000, 23887872

    Writing inode tables: done
    Creating journal (32768 blocks): done
    Writing superblocks and filesystem accounting information: done

    This filesystem will be automatically checked every 29 mounts or
    180 days, whichever comes first.  Use tune2fs -c or -i to override.

7. Create the mount point and mount the volume

    [root@Wine ~]# mkdir /LVM
    [root@Wine ~]# mount /dev/TestLVM/TestData /LVM/
    [root@Wine ~]# df -Th
    Filesystem                   Type   Size  Used Avail Use% Mounted on
    /dev/sda2                    ext4    79G  9.6G   65G  13% /
    tmpfs                        tmpfs  7.8G   72K  7.8G   1% /dev/shm
    /dev/sda1                    ext4   190M   32M  149M  18% /boot
    /dev/mapper/TestLVM-TestData ext4   118G   60M  112G   1% /LVM    # the newly mounted LVM volume

8. Mount automatically at boot

    [root@Wine ~]# echo '/dev/TestLVM/TestData /LVM ext4 defaults 0 0 ' >> /etc/fstab
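Running the `echo ... >> /etc/fstab` command twice leaves a duplicate entry behind. One way to guard against that is to check for the mount point first, as in the sketch below. The helper name `add_fstab_entry` and the `FSTAB` variable are assumptions made for this example; it writes to a scratch file unless `FSTAB` is pointed at the real `/etc/fstab`.

```shell
#!/bin/sh
# Append an fstab entry only if the mount point is not already listed.
# FSTAB defaults to a scratch file so the sketch can be tried safely;
# set FSTAB=/etc/fstab (as root) only after checking the result.

FSTAB=${FSTAB:-$(mktemp)}

add_fstab_entry() {
    device=$1
    mount_point=$2
    fs_type=$3
    touch "$FSTAB"
    # Field 2 of an fstab line is the mount point
    if awk -v mp="$mount_point" '$2 == mp { found = 1 } END { exit !found }' "$FSTAB"; then
        echo "entry for $mount_point already present"
    else
        printf '%s %s %s defaults 0 0\n' "$device" "$mount_point" "$fs_type" >> "$FSTAB"
        echo "entry for $mount_point added"
    fi
}

add_fstab_entry /dev/TestLVM/TestData /LVM ext4
add_fstab_entry /dev/TestLVM/TestData /LVM ext4   # second call changes nothing
```

After editing the real file, `mount -a` is a quick way to confirm that the new entry is valid before the next reboot.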

Steps to remove the LVM volume

1. After backing up the data on the LVM volume, unmount it and remove its entry from /etc/fstab

    [root@Wine ~]# umount /LVM/;df -h
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/sda2        79G  9.6G   65G  13% /
    tmpfs           7.8G   72K  7.8G   1% /dev/shm
    /dev/sda1       190M   32M  149M  18% /boot

2. Remove the LV

    [root@Wine ~]# lvremove /dev/TestLVM/TestData
    Do you really want to remove active logical volume TestData? [y/n]: y
      Logical volume "TestData" successfully removed

3. Remove the VG

    [root@Wine ~]# vgremove TestLVM
      Volume group "TestLVM" successfully removed

4. Remove the PVs

    [root@Wine ~]# pvremove /dev/sdb /dev/sdc /dev/sdd
      Labels on physical volume "/dev/sdb" successfully wiped
      Labels on physical volume "/dev/sdc" successfully wiped
      Labels on physical volume "/dev/sdd" successfully wiped
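The removal order matters: the layers come off in the reverse order of creation. The sketch below strings the four commands together behind a dry-run switch. The `run` and `remove_lvm` helpers and the `DRY_RUN` variable are inventions for this example, and the script only prints the commands unless `DRY_RUN=0` is set explicitly.

```shell
#!/bin/sh
# Tear down the LVM stack in reverse order of creation.
# DRY_RUN=1 (the default here) only prints the commands; set DRY_RUN=0
# and run as root to execute them. Everything on the LV is destroyed.

DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Usage: remove_lvm VG LV MOUNT_POINT PV...
# lvremove -f skips the interactive confirmation prompt.
# The chain stops at the first command that fails.
remove_lvm() {
    vg=$1; lv=$2; mount_point=$3; shift 3
    run umount "$mount_point" &&
        run lvremove -f "/dev/$vg/$lv" &&
        run vgremove "$vg" &&
        run pvremove "$@"
}

remove_lvm TestLVM TestData /LVM /dev/sdb /dev/sdc /dev/sdd
```

The matching line in /etc/fstab still has to be removed separately, otherwise the next boot will try to mount a volume that no longer exists.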

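Using software RAID

Creating a software RAID

RAID generally comes in two forms:

Hardware RAID: a RAID card, or a RAID controller integrated on the server motherboard, connects multiple disks and implements the RAID functions.

Software RAID: the RAID functions are emulated in software, providing the same functionality as hardware RAID.

On Linux, software RAID is usually implemented with the md module.

1. Check whether the mdadm package is installed

    [root@Wine ~]# rpm -q mdadm
    mdadm-3.3-6.el6.x86_64

2. Partition the disks that will form the software RAID and set the partition type to RAID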

    [root@Wine ~]# lsblk    # show disks and partitions
    NAME   MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    sr0     11:0    1 1024M  0 rom
    sdb      8:16   0   40G  0 disk
    sdd      8:48   0   30G  0 disk
    sdc      8:32   0   50G  0 disk
    sda      8:0    0   80G  0 disk
    ├─sda1   8:1    0  200M  0 part /boot
    └─sda2   8:2    0 79.8G  0 part /

Create the partition:

    [root@Wine ~]# fdisk /dev/sdb
    Device contains neither a valid DOS partition table, nor Sun, SGI or OSF disklabel
    Building a new DOS disklabel with disk identifier 0x7bfec905.
    Changes will remain in memory only, until you decide to write them.
    After that, of course, the previous content won't be recoverable.

    Warning: invalid flag 0x0000 of partition table 4 will be corrected by w(rite)

    WARNING: DOS-compatible mode is deprecated. It's strongly recommended to
             switch off the mode (command 'c') and change display units to
             sectors (command 'u').

    Command (m for help): n    # add a new partition
    Command action
       e   extended
       p   primary partition (1-4)
    p    # choose a primary partition
    Partition number (1-4): 1    # set the partition number
    First cylinder (1-5221, default 1):
    Using default value 1
    Last cylinder, +cylinders or +size{K,M,G} (1-5221, default 5221):
    Using default value 5221

    Command (m for help): l    # list the supported partition types

     0  Empty           24  NEC DOS         81  Minix / old Lin bf  Solaris
     1  FAT12           39  Plan 9          82  Linux swap / So c1  DRDOS/sec (FAT-
     2  XENIX root      3c  PartitionMagic  83  Linux           c4  DRDOS/sec (FAT-
     3  XENIX usr       40  Venix 80286     84  OS/2 hidden C:  c6  DRDOS/sec (FAT-
     4  FAT16 <32M      41  PPC PReP Boot   85  Linux extended  c7  Syrinx
     5  Extended        42  SFS             86  NTFS volume set da  Non-FS data
     6  FAT16           4d  QNX4.x          87  NTFS volume set db  CP/M / CTOS / .
     7  HPFS/NTFS       4e  QNX4.x 2nd part 88  Linux plaintext de  Dell Utility
     8  AIX             4f  QNX4.x 3rd part 8e  Linux LVM       df  BootIt
     9  AIX bootable    50  OnTrack DM      93  Amoeba          e1  DOS access
     a  OS/2 Boot Manag 51  OnTrack DM6 Aux 94  Amoeba BBT      e3  DOS R/O
     b  W95 FAT32       52  CP/M            9f  BSD/OS          e4  SpeedStor
     c  W95 FAT32 (LBA) 53  OnTrack DM6 Aux a0  IBM Thinkpad hi eb  BeOS fs
     e  W95 FAT16 (LBA) 54  OnTrackDM6      a5  FreeBSD         ee  GPT
     f  W95 Ext'd (LBA) 55  EZ-Drive        a6  OpenBSD         ef  EFI (FAT-12/16/
    10  OPUS            56  Golden Bow      a7  NeXTSTEP        f0  Linux/PA-RISC b
    11  Hidden FAT12    5c  Priam Edisk     a8  Darwin UFS      f1  SpeedStor
    12  Compaq diagnost 61  SpeedStor       a9  NetBSD          f4  SpeedStor
    14  Hidden FAT16 <3 63  GNU HURD or Sys ab  Darwin boot     f2  DOS secondary
    16  Hidden FAT16    64  Novell Netware  af  HFS / HFS+      fb  VMware VMFS
    17  Hidden HPFS/NTF 65  Novell Netware  b7  BSDI fs         fc  VMware VMKCORE
    18  AST SmartSleep  70  DiskSecure Mult b8  BSDI swap       fd  Linux raid auto
    1b  Hidden W95 FAT3 75  PC/IX           bb  Boot Wizard hid fe  LANstep
    1c  Hidden W95 FAT3 80  Old Minix       be  Solaris boot    ff  BBT
    1e  Hidden W95 FAT1

    Command (m for help): t    # change the partition type
    Selected partition 1
    Hex code (type L to list codes): fd    # set the partition type to RAID
    Changed system type of partition 1 to fd (Linux raid autodetect)

    Command (m for help): p    # print the partition table

    Disk /dev/sdb: 42.9 GB, 42949672960 bytes
    255 heads, 63 sectors/track, 5221 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x7bfec905

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdb1               1        5221    41937651   fd  Linux raid autodetect

    Command (m for help): w    # write the partition table to disk
    The partition table has been altered!

    Calling ioctl() to re-read partition table.
    Syncing disks.

fdisk, which writes an MBR (DOS) partition table here, is only suitable for disks smaller than 2 TB. For larger disks, use parted.

Creating a RAID partition with parted looks like this (every member disk needs a RAID partition, created with either tool):

    [root@Wine ~]# parted /dev/sdc
    GNU Parted 2.1
    Using /dev/sdc
    Welcome to GNU Parted! Type 'help' to view a list of commands.
    (parted) mklabel gpt
    Warning: The existing disk label on /dev/sdc will be destroyed and all data on this disk will be lost. Do you want to continue?
    Yes/No? y
    (parted) mkpart primary 1 -1
    (parted) set 1 raid    # this is the key step
    New state?  [on]/off? on
    (parted) print
    Model: VMware, VMware Virtual S (scsi)
    Disk /dev/sdc: 53.7GB
    Sector size (logical/physical): 512B/512B
    Partition Table: gpt

    Number  Start   End     Size    File system  Name     Flags
     1      1049kB  53.7GB  53.7GB               primary  raid
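The 2 TB limit mentioned for fdisk follows from the MBR format, which stores sector addresses in 32 bits. The sketch below works that out for 512-byte sectors and picks a tool accordingly; the function name `partition_tool` is hypothetical, and the 3 TiB disk is an invented example.

```shell
#!/bin/sh
# Pick a partitioning tool based on disk size.
# An MBR (DOS) partition table stores sector addresses in 32 bits, so with
# 512-byte sectors it can describe at most 2^32 * 512 bytes = 2 TiB.

MBR_LIMIT=$((4294967296 * 512))   # 2199023255552 bytes

partition_tool() {
    disk_bytes=$1
    if [ "$disk_bytes" -gt "$MBR_LIMIT" ]; then
        echo "parted (GPT)"
    else
        echo "fdisk (MBR)"
    fi
}

# The 40 GiB /dev/sdb from the fdisk output
partition_tool 42949672960
# A hypothetical 3 TiB disk
partition_tool $((3 * 1024 * 1024 * 1024 * 1024))
```

On a live system the byte count can be read with `blockdev --getsize64 /dev/sdb`. parted also accepts a GPT label on small disks, as the /dev/sdc session above shows, so the size check only tells you when GPT becomes mandatory.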

3. Create the RAID array with mdadm

    [root@Wine ~]# mdadm --create /dev/md0 --auto yes --level 0 -n3 /dev/sd{b,c,d}1
    mdadm: Defaulting to version 1.2 metadata
    mdadm: array /dev/md0 started.
    [root@Wine ~]# lsblk
    NAME      MAJ:MIN RM   SIZE RO TYPE  MOUNTPOINT
    sr0        11:0    1  1024M  0 rom
    sdb         8:16   0    40G  0 disk
    └─sdb1      8:17   0    40G  0 part
      └─md0     9:0    0 119.9G  0 raid0
    sdd         8:48   0    30G  0 disk
    └─sdd1      8:49   0    30G  0 part
      └─md0     9:0    0 119.9G  0 raid0
    sdc         8:32   0    50G  0 disk
    └─sdc1      8:33   0    50G  0 part
      └─md0     9:0    0 119.9G  0 raid0
    sda         8:0    0    80G  0 disk
    ├─sda1      8:1    0   200M  0 part  /boot
    └─sda2      8:2    0  79.8G  0 part  /

The options used in this command are:

-C/--create: create a new array

-a/--auto: let mdadm create the device file; -a yes is commonly used to create it in one step

-l/--level: the RAID level; RAID 0/1/4/5/6/10 and others are supported

-n/--raid-devices=: the number of active devices in the array

/dev/md0: the device name of the array

/dev/sd{b,c,d}1: the disk partitions that make up the array

For more help on mdadm, run mdadm -h or man mdadm.
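The level chosen with `--level` determines how much of the raw capacity is usable. The sketch below gives a rough estimate for a few levels using the three disk sizes from this article. `raid_capacity` is a made-up helper, and the figures ignore metadata overhead, which is why the real RAID 0 array shows 119.9 GiB rather than a round 120.

```shell
#!/bin/sh
# Rough usable capacity of an md array, ignoring metadata overhead.
# Usage: raid_capacity LEVEL SIZE_GIB...

raid_capacity() {
    level=$1; shift
    count=$#
    sum=0
    min=$1
    for size in "$@"; do
        sum=$((sum + size))
        if [ "$size" -lt "$min" ]; then
            min=$size
        fi
    done
    case $level in
        0) echo "$sum" ;;                       # md RAID 0 uses all space, even on unequal members
        1) echo "$min" ;;                       # every member holds a full copy
        5) echo $(( (count - 1) * min )) ;;     # one member's worth of parity
        6) echo $(( (count - 2) * min )) ;;     # two members' worth of parity
        *) echo "unsupported level: $level" >&2; return 1 ;;
    esac
}

for level in 0 1 5; do
    echo "RAID $level: $(raid_capacity "$level" 40 50 30) GiB"
done
```

Only RAID 0 delivers the full combined capacity, and it is also the only level listed here with no redundancy: losing any one member loses the array.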

Once the array is created, check its status:

    [root@Wine ~]# cat /proc/mdstat
    Personalities : [raid0]
    md0 : active raid0 sdd1[2] sdc1[1] sdb1[0]
          125722624 blocks super 1.2 512k chunks

    unused devices: <none>

or use

    [root@Wine ~]# mdadm -D /dev/md0    # show software RAID details
    /dev/md0:
            Version : 1.2
      Creation Time : Wed Nov 14 14:36:11 2018
         Raid Level : raid0
         Array Size : 125722624 (119.90 GiB 128.74 GB)
       Raid Devices : 3
      Total Devices : 3
        Persistence : Superblock is persistent

        Update Time : Wed Nov 14 14:36:11 2018
              State : clean
     Active Devices : 3
    Working Devices : 3
     Failed Devices : 0
      Spare Devices : 0

         Chunk Size : 512K

               Name : Wine:0  (local to host Wine)
               UUID : 2c8da2fd:7729efbd:5e414dd0:9cfb9f5f
             Events : 0

        Number   Major   Minor   RaidDevice State
           0       8       17        0      active sync   /dev/sdb1
           1       8       33        1      active sync   /dev/sdc1
           2       8       49        2      active sync   /dev/sdd1
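For scripted checks, the first line of each array entry in /proc/mdstat carries the essentials. The sketch below pulls out the array name, state, level, and member count with awk. `md_summary` is a hypothetical helper, and it only handles the simple "active" form shown above; an inactive array has no level field on that line and would need extra handling.

```shell
#!/bin/sh
# Summarize arrays from /proc/mdstat-style text read on standard input.
# Prints "<array> <state> <level> <member count>" for each md device.
# Assumes the simple form: "mdN : active <level> member[idx] ..."

md_summary() {
    awk '/^md[0-9]+ :/ {
        # Fields: name ":" state level member[idx] ...
        print $1, $3, $4, NF - 4
    }'
}

# Sample text copied from the output above; on a live system use:
#   md_summary < /proc/mdstat
md_summary <<'EOF'
Personalities : [raid0]
md0 : active raid0 sdd1[2] sdc1[1] sdb1[0]
      125722624 blocks super 1.2 512k chunks

unused devices: <none>
EOF
```

For the sample input this prints one line describing md0 as an active raid0 array with three members.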

4. Create the configuration file for md0

    [root@Wine ~]# echo DEVICE /dev/sd{b,c,d}1 >> /etc/mdadm.conf
    [root@Wine ~]# mdadm -Evs >> /etc/mdadm.conf
    [root@Wine ~]# cat /etc/mdadm.conf
    DEVICE /dev/sdb1 /dev/sdc1 /dev/sdd1
    ARRAY /dev/md/0 level=raid0 metadata=1.2 num-devices=3 UUID=2c8da2fd:7729efbd:5e414dd0:9cfb9f5f name=Wine:0
       devices=/dev/sdb1,/dev/sdc1,/dev/sdd1

5. Format the RAID device

    [root@Wine ~]# mkfs -t ext4 /dev/md0
    mke2fs 1.41.12 (17-May-2010)
    Filesystem label=
    OS type: Linux
    Block size=4096 (log=2)
    Fragment size=4096 (log=2)
    Stride=128 blocks, Stripe width=384 blocks
    7864320 inodes, 31430656 blocks
    1571532 blocks (5.00%) reserved for the super user
    First data block=0
    Maximum filesystem blocks=4294967296
    960 block groups
    32768 blocks per group, 32768 fragments per group
    8192 inodes per group
    Superblock backups stored on blocks:
        32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,
        4096000, 7962624, 11239424, 20480000, 23887872

    Writing inode tables: done
    Creating journal (32768 blocks): done
    Writing superblocks and filesystem accounting information: done

    This filesystem will be automatically checked every 32 mounts or
    180 days, whichever comes first.  Use tune2fs -c or -i to override.
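Unlike the LVM volume formatted earlier, this mke2fs run reports a non-zero Stride and Stripe width, because it picked up the RAID geometry from the md device. The sketch below reproduces those two numbers from the chunk size and disk count; `raid_fs_geometry` is a hypothetical helper written for this example.

```shell
#!/bin/sh
# Reproduce the "Stride" and "Stripe width" values reported by mke2fs.
#   stride       = RAID chunk size / filesystem block size
#   stripe width = stride * number of data-bearing disks

raid_fs_geometry() {
    chunk_kib=$1     # md chunk size in KiB
    block_kib=$2     # filesystem block size in KiB
    data_disks=$3    # disks holding data (all members for RAID 0)
    stride=$((chunk_kib / block_kib))
    echo "stride=$stride stripe_width=$((stride * data_disks))"
}

# 512 KiB chunks, 4 KiB blocks, three RAID 0 members
raid_fs_geometry 512 4 3
```

The result, a stride of 128 blocks and a stripe width of 384 blocks, matches the mke2fs output above. When the values are not detected automatically, they can be supplied through mke2fs's `-E` extended options.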

6. Mount automatically at boot

The mount point /SoftRAID must exist before mount -a is run.

    [root@Wine ~]# blkid | grep /dev/md    # mounting by UUID is recommended
    /dev/md0: UUID="40829115-a1c5-4d5a-af4a-07225a4619fc" TYPE="ext4"
    [root@Wine ~]# echo "UUID=40829115-a1c5-4d5a-af4a-07225a4619fc /SoftRAID ext4 defaults 0 0" >> /etc/fstab    # add the mount entry to /etc/fstab
    [root@Wine ~]# mount -a;df -h    # mount everything in fstab and show the mounted filesystems
    Filesystem      Size  Used Avail Use% Mounted on
    /dev/sda2        79G  9.6G   65G  13% /
    tmpfs           7.8G   72K  7.8G   1% /dev/shm
    /dev/sda1       190M   32M  149M  18% /boot
    /dev/md0        118G   60M  112G   1% /SoftRAID
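Copying the UUID by hand is error-prone, so the fstab line can be generated from the blkid output instead. The sketch below parses a single blkid line; `fstab_line_from_blkid` is a made-up helper, and the parsing assumes the `UUID="..." TYPE="..."` layout shown above.

```shell
#!/bin/sh
# Build an fstab line from one line of blkid output.
# Assumes the 'UUID="..." TYPE="..."' layout printed by blkid above.

fstab_line_from_blkid() {
    blkid_line=$1
    mount_point=$2
    uuid=$(printf '%s\n' "$blkid_line" | sed -n 's/.* UUID="\([^"]*\)".*/\1/p')
    fs_type=$(printf '%s\n' "$blkid_line" | sed -n 's/.* TYPE="\([^"]*\)".*/\1/p')
    if [ -z "$uuid" ] || [ -z "$fs_type" ]; then
        echo "could not parse blkid output" >&2
        return 1
    fi
    echo "UUID=$uuid $mount_point $fs_type defaults 0 0"
}

fstab_line_from_blkid \
    '/dev/md0: UUID="40829115-a1c5-4d5a-af4a-07225a4619fc" TYPE="ext4"' \
    /SoftRAID
```

This prints the same line that the article appends to /etc/fstab. On a live system, `blkid -s UUID -o value /dev/md0` prints just the UUID, which avoids the parsing altogether.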

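Removing the software RAID

1. Unmount the filesystem

    [root@Wine ~]# umount /dev/md0

2. Stop the software RAID device

    [root@Wine ~]# mdadm -S /dev/md0
    mdadm: stopped /dev/md0

3. Remove the disks from the RAID by wiping their md superblocks

    [root@Wine ~]# mdadm --misc --zero-superblock /dev/sd{b,c,d}1

4. Delete the mdadm configuration file

    [root@Wine ~]# rm -f /etc/mdadm.conf

5. Remove the mount entry from /etc/fstab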
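The article gives no command for this last step. One possible approach is sketched below: it filters out the line whose mount point matches and writes the rest back. `remove_fstab_entry` is a hypothetical helper, the /boot line in the sample file is invented for the demonstration, and the demo runs on a temporary copy rather than the real /etc/fstab.

```shell
#!/bin/sh
# Remove the fstab line for a given mount point.
# The demo below runs against a temporary file, not the real /etc/fstab.

remove_fstab_entry() {
    fstab=$1
    mount_point=$2
    tmp=$(mktemp) || return 1
    # Keep every line whose second field is not the mount point
    if awk -v mp="$mount_point" '$2 != mp' "$fstab" > "$tmp"; then
        # Overwrite the contents in place so ownership and mode are preserved
        cat "$tmp" > "$fstab"
    fi
    rm -f "$tmp"
}

demo=$(mktemp)
cat > "$demo" <<'EOF'
/dev/sda1 /boot ext4 defaults 1 2
UUID=40829115-a1c5-4d5a-af4a-07225a4619fc /SoftRAID ext4 defaults 0 0
EOF
remove_fstab_entry "$demo" /SoftRAID
cat "$demo"
rm -f "$demo"
```

Only the /boot line remains in the demo file. Before applying this to /etc/fstab, keep a backup copy, since a broken fstab can prevent the system from booting.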

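These are two common ways to combine the capacity of multiple disks on Linux, offered for reference only. In production environments, hardware RAID is still the recommended choice: data is priceless.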
